Vid4

The current state of the art on Vid4 4x upscaling is EvTexture, according to a Papers With Code comparison of 27 papers. One entry on that leaderboard is TecoGAN ("Learning Temporal Coherence via Self-Supervision for GAN-based Video Generation", 2018), listed with a score of 25.57.

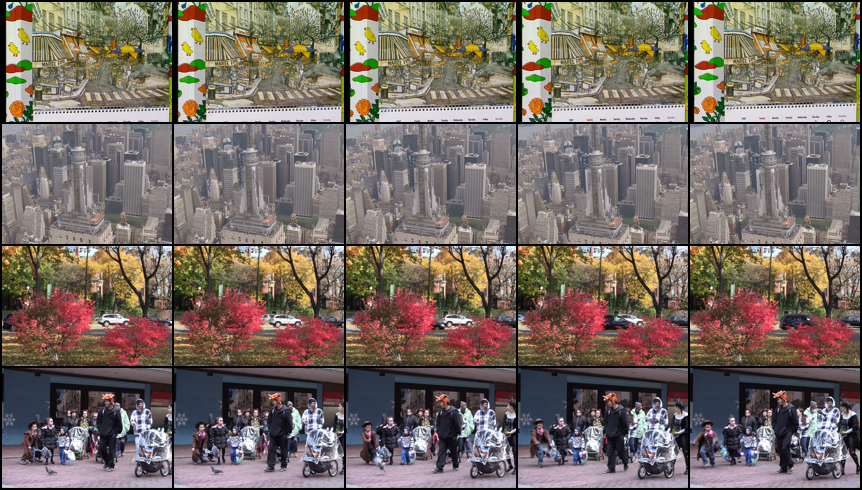

Vid4 4x Upscaling Benchmark (Video Super-Resolution), Papers With Code

The Vid4 dataset is generally used for testing video super-resolution (VSR). It consists of four sequences: walk (720x480, 47 frames), foliage (720x480, 49 frames), city (704x576, 34 frames), and calendar (720x576, 41 frames).

Video super-resolution is a computer vision task that aims to increase the resolution of a video sequence. The goal is to generate high-resolution frames from low-resolution input, improving the overall quality of the video. (Image credit: Detail-Revealing Deep Video Super-Resolution.)

One paper that evaluates on Vid4 reports its comparison in Tables 2 and 3. According to its authors, their model is better than other methods in most cases, with significantly improved PSNR and SSIM values.

The EGVSR repository describes two features:

- Unified framework: the repo provides a unified framework for various state-of-the-art deep-learning-based VSR methods, such as VESPCN, SOFVSR, FRVSR, TecoGAN, and the authors' own EGVSR.
- Multiple test datasets: the repo offers three video datasets for testing: the standard test dataset Vid4, ToS3 (used in TecoGAN), and the authors' new dataset GVT72 (selected from the Vimeo site and covering more scenes).
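The PSNR comparisons mentioned above can be sketched as follows. This is a minimal illustration, not the evaluation protocol of any particular paper or repository: the names `psnr` and `dataset_average` are hypothetical, the frame counts come from the dataset description, and the sample pixel values are made up. Published protocols differ in details such as colour channel and border handling, and in whether the dataset score is a plain mean over the four sequences or a frame-weighted mean, so both averaging options are shown.

```python
import math

# Frame counts per Vid4 sequence, taken from the dataset description.
VID4_FRAMES = {"walk": 47, "foliage": 49, "city": 34, "calendar": 41}


def psnr(reference, estimate, peak=255.0):
    """Return PSNR in dB between two equal-length sequences of pixel values."""
    if len(reference) != len(estimate) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs: PSNR is unbounded
    return 10.0 * math.log10(peak ** 2 / mse)


def dataset_average(per_sequence, frame_counts=VID4_FRAMES, weight_by_frames=False):
    """Average per-sequence scores, optionally weighting each by its frame count."""
    if weight_by_frames:
        total = sum(frame_counts[name] for name in per_sequence)
        weighted = sum(per_sequence[name] * frame_counts[name] for name in per_sequence)
        return weighted / total
    return sum(per_sequence.values()) / len(per_sequence)


if __name__ == "__main__":
    # Made-up 4-pixel "frames", used only to exercise the functions.
    hr = [52, 55, 61, 59]
    sr = [50, 56, 60, 62]
    print(f"PSNR: {psnr(hr, sr):.2f} dB")
    print("Total Vid4 frames:", sum(VID4_FRAMES.values()))
```

Because the four sequences have different lengths, the two averaging modes generally give different dataset scores, which is worth checking before comparing numbers across papers.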

Worth bookmarking: datasets you need to know when getting started with super-resolution (Zhihu)

Vid4 is commonly used in the literature for comparison, but because it consists of only four video sequences, it has limited ability to assess the relative merits of competing approaches. Each sequence in the set has limited motion and little inter-frame variation.

Real-Time Video Super-Resolution with Spatio-Temporal Networks and Motion Compensation. Jose Caballero, Christian Ledig, Andrew Aitken, Alejandro Acosta, Johannes Totz, Zehan Wang, Wenzhe Shi. Convolutional neural networks have enabled accurate image super-resolution in real time. However, recent attempts to benefit from temporal correlations...

