Solved When Minimizing The Sum Of Squared Errors Chegg

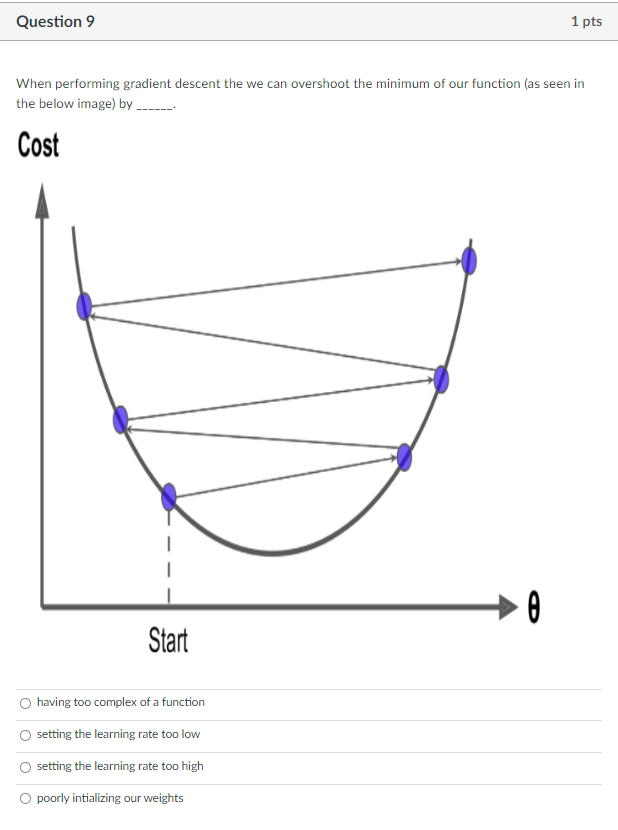

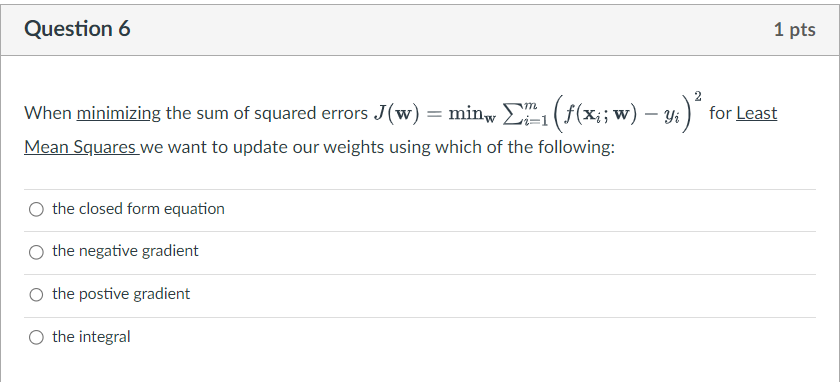

Step 1. When minimizing the sum of squared errors for least mean squares,

$$\min_w J(w), \qquad J(w) = \sum_{i=1}^{m} \left(f(x_i; w) - y_i\right)^2,$$

we want to update our weights using which of the following?

- the closed form equation
- the negative gradient
- the positive gradient
- the integral

Online learning is when we update our model based on:

- all data samples
- none of the above
- one data
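To make the two questions above concrete, here is a minimal sketch of gradient-based weight updates for a sum-of-squared-errors objective. It assumes a one-feature linear model $f(x; w, b) = wx + b$; the function names, toy data, and learning rate are illustrative choices, not part of the original question. The batch step uses every sample for each update, while the online step uses a single sample. Both move against the gradient, since that is the direction in which the objective decreases.

```python
def sse(w, b, xs, ys):
    """Sum of squared errors J for the line f(x) = w * x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys))


def lms_batch_step(w, b, xs, ys, lr):
    """One batch gradient-descent step: the gradient uses all samples."""
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys))
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys))
    # Step in the direction of the negative gradient to decrease J.
    return w - lr * grad_w, b - lr * grad_b


def lms_online_step(w, b, x, y, lr):
    """One online step: the gradient uses a single (x, y) sample."""
    err = w * x + b - y
    return w - lr * 2 * err * x, b - lr * 2 * err


# Toy data generated by y = 2x + 1 (hypothetical, for illustration only).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w, b = 0.0, 0.0
for _ in range(2000):
    w, b = lms_batch_step(w, b, xs, ys, lr=0.01)

print(f"w={w:.4f}, b={b:.4f}, SSE={sse(w, b, xs, ys):.2e}")
```

On this toy data the batch updates should settle close to $w = 2$, $b = 1$. The learning rate of 0.01 was chosen by hand for this data set; a larger value can make the updates diverge.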

Ordinary least squares (OLS) regression is defined as minimizing the sum of squared errors. So after fitting an OLS regression, what is the purpose of optimizing SSE (or MSE, RMSE, etc.) if linear regression already revolves around finding the best-fit line that minimizes the sum of squared errors?

Consider instead the plain sum of errors, $\sum_{i=1}^n (y_i - \hat\beta_0 - \hat\beta_1 x_i)$. If you expand this sum, you get $$\sum_{i=1}^n y_i - n \hat\beta_0 - \hat\beta_1 \sum_{i=1}^n x_i.$$ The terms $\sum y_i$ and $\sum x_i$ are fixed constants that do not depend on the coefficients. So if I want this sum of errors to equal zero, there are two free variables for this single equation, and there are in general infinitely many solutions as a result.

Lastly, there is the case of $e_1 = 0.5$ and $e_2 = 0.2$. $e_1$ is further away to start, but when you square it, 0.25 is compared with 0.04. Anyway, just wondering why we do sum of squared error minimization rather than absolute value. $$0.5^2 = 0.25 > 0.04 = 0.2^2$$ Squaring is monotonic on nonnegative values, and monotonic transformations preserve order.

Rule 1: the power rule. Example: \[\begin{aligned} f(x) &= x^{6} \\ f'(x) &= 6x^{6-1} \\ &= 6x^{5} \end{aligned}\] A second example can be plotted in R.
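The point about the plain sum of errors having infinitely many zero solutions can be checked numerically. The sketch below uses a small made-up data set (the values are assumptions for illustration) and shows that, for any slope, picking the intercept so the line passes through $(\bar{x}, \bar{y})$ makes the signed errors cancel. The sum of squared errors, by contrast, differs across those lines and is smallest at the closed-form OLS coefficients.

```python
# Toy data (hypothetical values chosen for illustration).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.1, 5.9, 8.2]

n = len(xs)
x_bar = sum(xs) / n
y_bar = sum(ys) / n


def residual_sum(b0, b1):
    """Plain (signed) sum of errors for the line y = b0 + b1 * x."""
    return sum(y - b0 - b1 * x for x, y in zip(xs, ys))


def sse(b0, b1):
    """Sum of squared errors for the same line."""
    return sum((y - b0 - b1 * x) ** 2 for x, y in zip(xs, ys))


# For ANY slope b1, choosing b0 = y_bar - b1 * x_bar makes the signed
# errors cancel, so "sum of errors = 0" does not pin down a single line.
candidates = [(y_bar - b1 * x_bar, b1) for b1 in (-1.0, 0.0, 2.0, 5.0)]

# Closed-form OLS coefficients for simple linear regression.
s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
s_xx = sum((x - x_bar) ** 2 for x in xs)
b1_ols = s_xy / s_xx
b0_ols = y_bar - b1_ols * x_bar

for b0, b1 in candidates + [(b0_ols, b1_ols)]:
    print(f"b1={b1:6.3f}  sum of errors={residual_sum(b0, b1):9.2e}  "
          f"SSE={sse(b0, b1):9.4f}")
```

Every row should report a sum of errors that is zero up to floating-point rounding, while the SSE column separates the candidate lines and is lowest for the OLS row.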

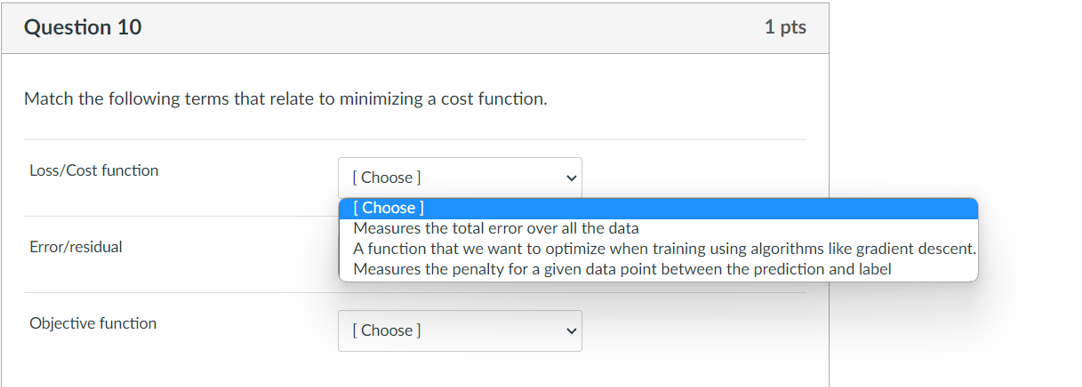

1. Sum of squares total (SST): the sum of squared differences between individual data points ($y_i$) and the mean of the response variable ($\bar{y}$). $\text{SST} = \sum (y_i - \bar{y})^2$.
2. Sum of squares regression (SSR): the sum of squared differences between predicted data points ($\hat{y}_i$) and the mean of the response variable ($\bar{y}$). $\text{SSR} = \sum (\hat{y}_i - \bar{y})^2$.

Question: We discussed "minimizing the sum of squared errors." What is meant by this?

- We want to minimize the portion of the world that is random.
- We want to minimize the amount of variance we have explained.
- We want to find a line that best summarizes the relationship between two variables.
- We want to maximize the amount of the world that is random.
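The SST and SSR definitions above can be computed directly from a fitted line. The following is a rough sketch on made-up data (the values and variable names are assumptions for illustration). It also computes the sum of squared errors, taken here as $\sum (y_i - \hat{y}_i)^2$, and checks the standard identity SST = SSR + SSE, which holds for an OLS fit that includes an intercept.

```python
# Toy data (hypothetical values chosen for illustration).
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]

n = len(xs)
x_bar = sum(xs) / n
y_bar = sum(ys) / n

# Closed-form OLS fit of y = b0 + b1 * x.
b1 = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
      / sum((x - x_bar) ** 2 for x in xs))
b0 = y_bar - b1 * x_bar
y_hat = [b0 + b1 * x for x in xs]

# Sum of squares total: observed values vs. the mean of the response.
sst = sum((y - y_bar) ** 2 for y in ys)
# Sum of squares regression: predicted values vs. the mean of the response.
ssr = sum((yh - y_bar) ** 2 for yh in y_hat)
# Sum of squared errors: observed values vs. predicted values.
sse = sum((y - yh) ** 2 for y, yh in zip(ys, y_hat))

r_squared = ssr / sst  # share of the total variation captured by the line

print(f"SST={sst:.4f}  SSR={ssr:.4f}  SSE={sse:.4f}  R^2={r_squared:.4f}")
```

Minimizing the SSE therefore amounts to finding the line that leaves the smallest unexplained portion of SST for the given data.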

