Probabilistic Analysis 4 Using Linearity Of Expectation

In this video we analyze another simple algorithm and examine how the linearity property can simplify the analysis.

3.2: More on Expectation (Alex Tsun)

3.2.1 Linearity of Expectation. Right now, the only way you've learned to compute expectation is by first computing the PMF $p_X(k)$ of a random variable $X$ and using the formula $E[X] = \sum_{k \in \Omega_X} k \, p_X(k)$, which is just a weighted sum of the possible values of $X$.
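The PMF-based formula above can be sketched in a few lines of Python. This is a minimal illustration, not code from the video or notes: the choice of random variable (number of heads in fair coin flips) and the helper names `pmf_of_heads` and `expectation` are assumptions made for the example.

```python
from fractions import Fraction
from itertools import product


def pmf_of_heads(num_flips):
    """PMF of the number of heads in num_flips fair coin flips (by enumeration)."""
    pmf = {}
    outcome_prob = Fraction(1, 2 ** num_flips)  # every flip sequence is equally likely
    for flips in product((0, 1), repeat=num_flips):
        k = sum(flips)  # value of the random variable on this outcome
        pmf[k] = pmf.get(k, Fraction(0)) + outcome_prob
    return pmf


def expectation(pmf):
    """E[X] = sum over k of k * p_X(k): a weighted sum of the possible values."""
    return sum(k * p for k, p in pmf.items())


pmf = pmf_of_heads(2)
print(pmf)               # probabilities of 0, 1 and 2 heads
print(expectation(pmf))  # weighted sum of those values
```

Exact fractions are used instead of floats so the weighted sum can be compared for equality without rounding concerns.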

L05 11 Linearity Of Expectations Youtube

… with probability 1/4, X = 1 with probability 1/2, and X = 2 with probability 1/4. The expectation $E[X]$ of a random variable $X$ is defined as the sum, over all values $X$ can take on, of each value times the probability of that value. That is, the expectation is a weighted average. So, for example, the expectation of the variable $X$ above is the sum of each of its values times the corresponding probability.

We often use these three steps to solve complicated expectations. Decompose: find the right way to decompose the random variable into a sum of simple random variables, $X = X_1 + X_2 + \cdots + X_n$. LOE: apply linearity of expectation, $E[X] = E[X_1] + E[X_2] + \cdots + E[X_n]$. Conquer: compute the expectation of each simple random variable, $E[X_i]$.
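As a hedged sketch of the decompose / LOE / conquer recipe, consider the number of fixed points of a uniformly random permutation of n items. This example is an assumption chosen for illustration and does not come from the source. Decompose: $X = X_1 + \cdots + X_n$, where $X_i$ indicates that item i stays in position i. LOE: $E[X] = \sum_i E[X_i]$. Conquer: $E[X_i] = 1/n$. The function names below are hypothetical.

```python
from fractions import Fraction
from itertools import permutations


def expected_fixed_points_brute_force(n):
    """Average number of fixed points over all n! permutations (exact)."""
    perms = list(permutations(range(n)))
    total = sum(
        sum(1 for position, value in enumerate(perm) if position == value)
        for perm in perms
    )
    return Fraction(total, len(perms))


def expected_fixed_points_by_linearity(n):
    """Sum of the n indicator expectations, each equal to 1/n."""
    return sum(Fraction(1, n) for _ in range(n))


for n in range(1, 6):
    # Both routes should agree for every n, even though the indicators are dependent.
    print(n, expected_fixed_points_brute_force(n), expected_fixed_points_by_linearity(n))
```

The brute-force route needs all n! permutations, while the linearity route needs only one easy probability per indicator, which is the point of the recipe.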

Probability Proof For Linearity Of Expectation Question Mathematics

Linearity of expectation is the property that the expected value of the sum of random variables is equal to the sum of their individual expected values, regardless of whether they are independent. The expected value of a random variable is essentially a weighted average of possible outcomes. We are often interested in the expected value of a sum of random variables. For example, suppose we are …

Fact 1. If $X$ and $Y$ are real-valued random variables in the same probability space, then $E[X + Y] = E[X] + E[Y]$. The amazing thing is that linearity of expectation works even when the random variables are dependent. This allows us to calculate expectations very easily. The first example we saw of this is Buffon's needle problem.
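To see that linearity does not need independence, here is a small exact check. The setup is an assumption made for illustration: $X$ is a fair six-sided die roll and $Y = 7 - X$, so $Y$ is completely determined by $X$. The helper `expect` is a hypothetical name.

```python
from fractions import Fraction

# Joint outcomes (x, y) with y = 7 - x; each occurs with probability 1/6.
outcomes = [(x, 7 - x) for x in range(1, 7)]
prob = Fraction(1, 6)


def expect(f):
    """Expectation of f(X, Y) under the joint distribution above."""
    return sum(prob * f(x, y) for x, y in outcomes)


e_x = expect(lambda x, y: x)
e_y = expect(lambda x, y: y)
e_sum = expect(lambda x, y: x + y)
e_prod = expect(lambda x, y: x * y)

print(e_sum == e_x + e_y)   # linearity holds despite the dependence
print(e_prod == e_x * e_y)  # the product rule, which does need independence, fails here
```

The contrast with the product is deliberate: only the sum rule survives dependence.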

L06 8 Linearity Of Expectations The Mean Of The Binomial Youtube

The expectation is easy to calculate using linearity of expectation: $E[X] = np$, since $X$ is a sum of $n$ Bernoulli random variables and each Bernoulli has expectation $p$.

c. Geometric random variable. A random variable $X \sim \mathrm{Geom}(p)$ with parameter $p$ is the number of times a coin whose probability of heads is $p$ needs to be tossed before we see the first heads.

Hint: express this complicated random variable as a sum of indicator random variables (i.e., random variables that only take on the values 0 or 1), and use linearity of expectation. McDonald's decides to give a Pokemon toy with every Happy Meal.
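The $np$ claim can be checked against the PMF-based definition. The sketch below assumes $X$ is Binomial$(n, p)$, which is what the sum-of-$n$-Bernoullis description suggests; the function names and the sample values of $n$ and $p$ are illustrative assumptions.

```python
from fractions import Fraction
from math import comb


def binomial_mean_from_pmf(n, p):
    """E[X] computed the long way: sum over k of k * C(n, k) * p^k * (1 - p)^(n - k)."""
    q = 1 - p
    return sum(k * comb(n, k) * p ** k * q ** (n - k) for k in range(n + 1))


def binomial_mean_by_linearity(n, p):
    """E[X] as a sum of n indicator expectations, each equal to p."""
    return sum(p for _ in range(n))


n, p = 10, Fraction(3, 10)  # exact arithmetic, so the two results can be compared directly
print(binomial_mean_from_pmf(n, p))
print(binomial_mean_by_linearity(n, p))
```

The PMF route needs the binomial coefficients and a full sum over k; the linearity route only needs the expectation of a single coin flip.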

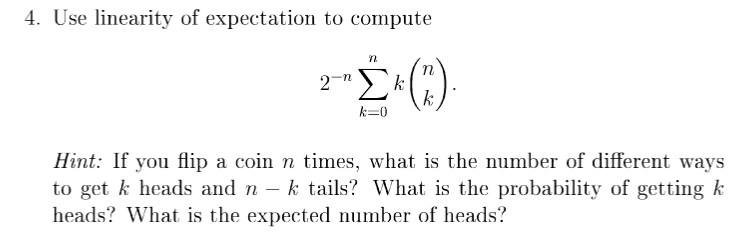

