Policy Gradient Methods: Reinforcement Learning, Part 6

Finally, since policy-based methods find the policy directly, they are usually more efficient than value-based methods in terms of training time. Policy gradient methods also ensure adequate exploration: in contrast to value-based solutions, which use an implicit ε-greedy policy, a policy gradient method learns its policy as it goes, and because that policy is stochastic, it keeps sampling a range of actions rather than always picking the current best one.

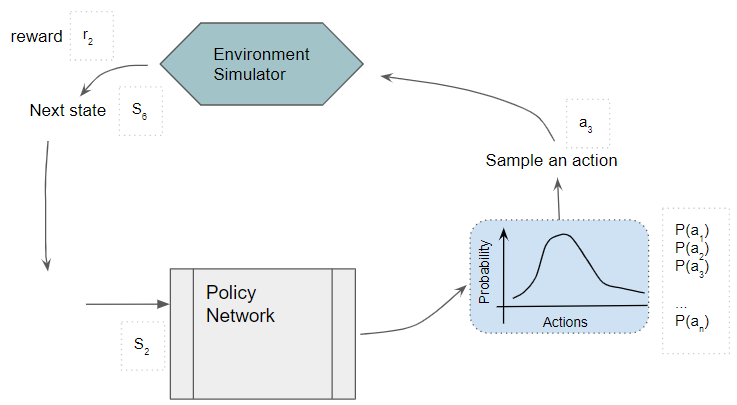

Now that we have the big picture of the (simplified) policy gradient algorithm, let's dive deeper into policy gradient methods. We have a stochastic policy $\pi$ with parameters $\theta$. Given a state, $\pi$ outputs a probability distribution over actions.

The goal of reinforcement learning is to find an optimal behavior strategy for the agent to obtain optimal rewards. Policy gradient methods model and optimize the policy directly. The policy is usually modeled as a function parameterized by $\theta$, written $\pi_\theta(a \vert s)$.

These methods are less common in deep reinforcement learning but can be useful when flexibility and adaptability are needed. While policy gradient methods are typically associated with stochastic policies, deterministic policies can also be used, especially in continuous action spaces.

This is the sixth article in my series on reinforcement learning (RL). We now have a good understanding of the concepts that form the building blocks of an RL problem, and the techniques used to solve them. We have also taken a detailed look at two value-based algorithms, Q-learning and Deep Q-Networks (DQN), which was our first step into deep reinforcement learning.
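To make the parameterized stochastic policy $\pi_\theta(a \vert s)$ described above more concrete, here is a minimal sketch. It assumes a linear-softmax policy, which is only one possible choice; the function names, feature sizes, and example state are hypothetical and chosen purely for illustration.

```python
import numpy as np

def softmax(logits):
    """Turn a vector of real-valued scores into a probability distribution."""
    shifted = logits - np.max(logits)  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()

def policy(theta, state):
    """pi_theta(. | s): one logit per action (theta @ state), then softmax.

    A linear parameterization is an assumption of this sketch; in deep RL
    the logits would typically come from a neural network instead.
    """
    return softmax(theta @ state)

rng = np.random.default_rng(0)

# Hypothetical sizes: 3 actions, 4 state features.
theta = np.zeros((3, 4))
state = np.array([0.1, -0.2, 0.5, 1.0])

probs = policy(theta, state)              # probability of each action in this state
action = rng.choice(len(probs), p=probs)  # sample an action from the distribution
```

Because the action is sampled rather than chosen greedily, every action with non-zero probability can still be tried, which is where the exploration behaviour of a stochastic policy comes from.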

The goal of gradient ascent is to find the weights of a policy function that maximise the expected return. This is done iteratively by calculating the gradient from some data and updating the weights of the policy. The expected value of a policy $\pi_\theta$ with parameters $\theta$ is defined as $J(\theta) = V^{\pi_\theta}(s_0)$, the value of the start state $s_0$ under that policy. The policy gradient method is also the "actor" part of actor-critic methods (covered in my post on actor-critic methods), so understanding it is foundational to studying reinforcement learning.
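The iterative loop described above (sample data, estimate a gradient, update the policy weights) can be sketched with a REINFORCE-style update. This is a toy illustration under stated assumptions, not the exact algorithm from any of the referenced articles: the environment is a hypothetical one-step, two-action problem with fixed rewards, the policy is a plain softmax over two logits, and the learning rate and step count are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)

theta = np.zeros(2)             # policy weights: one logit per action
alpha = 0.1                     # learning rate (arbitrary choice for this sketch)
rewards = np.array([0.0, 1.0])  # hypothetical environment: action 1 pays more

def softmax(logits):
    exp = np.exp(logits - logits.max())
    return exp / exp.sum()

for _ in range(2000):
    probs = softmax(theta)
    a = rng.choice(2, p=probs)  # sample an action from pi_theta
    G = rewards[a]              # return of this one-step episode

    # For a softmax over logits, grad_theta log pi_theta(a) = one_hot(a) - probs.
    grad_log_pi = -probs
    grad_log_pi[a] += 1.0

    # Gradient ascent on J(theta) using the sampled estimate G * grad log pi.
    theta += alpha * G * grad_log_pi

final_probs = softmax(theta)    # the learned action distribution
```

In this toy setting the update only fires when the rewarded action is sampled, so the probability of that action grows over time. Real problems have multi-step episodes and noisy returns, which is why practical implementations usually add a baseline or a critic to reduce variance.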
