Introduction To Apache Spark Class Preview

Introduction to Apache Spark is designed to introduce you to one of the most important big data technologies on the market, Apache Spark. You will start by l…

Get Spark from the downloads page of the project website. This documentation is for Spark version 4.0.0-preview1. Spark uses Hadoop's client libraries for HDFS and YARN. Downloads are pre-packaged for a handful of popular Hadoop versions. Users can also download a "Hadoop free" binary and run Spark with any Hadoop version by augmenting…

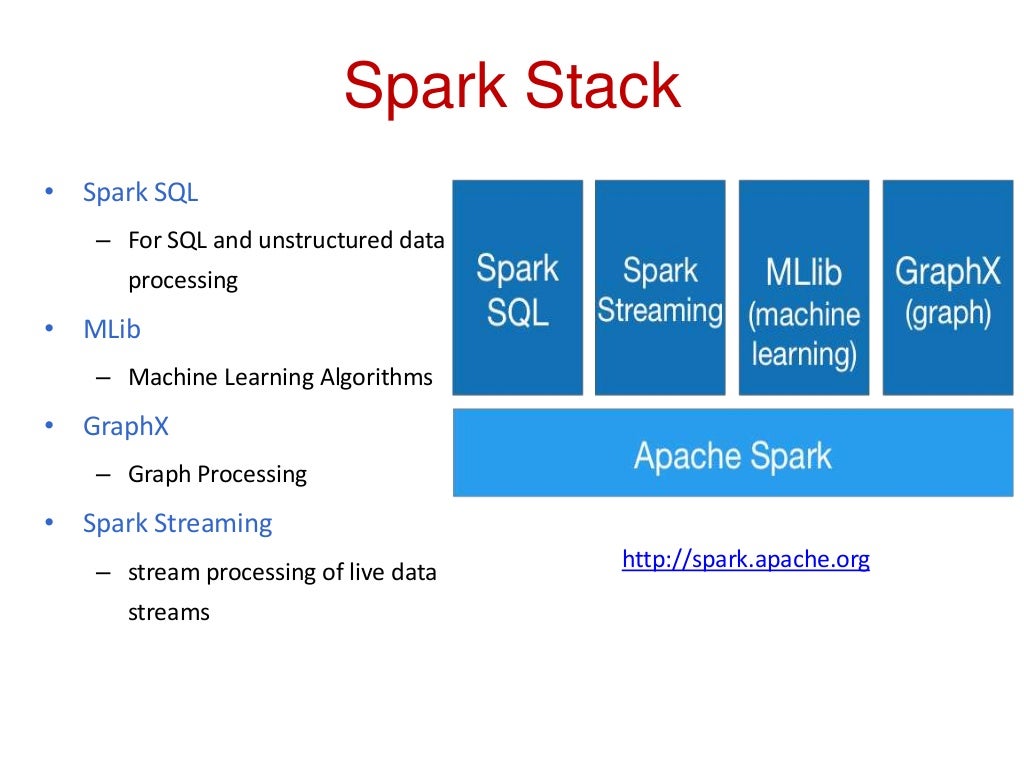

Introduction. Apache Spark is an open source cluster computing framework. It provides elegant development APIs for Scala, Java, Python, and R that allow developers to execute a variety of data-intensive workloads across diverse data sources, including HDFS, Cassandra, HBase, and S3. Historically, Hadoop's MapReduce proved to be inefficient.

Apache Spark™ documentation. Setup instructions, programming guides, and other documentation are available for each stable version of Spark, as well as for preview releases. The documentation covers getting started with Spark, as well as the built-in components MLlib, Spark Streaming, and GraphX.

Quick start. This tutorial provides a quick introduction to using Spark. We will first introduce the API through Spark's interactive shell (in Python or Scala), then show how to write applications in Java, Scala, and Python. To follow along with this guide, first download a packaged release of Spark from the Spark website.

What is Apache Spark? An introduction. Spark is an Apache project advertised as "lightning fast cluster computing". It has a thriving open source community and is the most active Apache project at the moment. Spark provides a faster and more general data processing platform. Spark lets you run programs up to 100x faster in memory, or 10x…

Apache Spark 3.5 is a framework with support for Scala, Python, R, and Java. It is available through several interfaces: Spark is the default interface for Scala and Java, PySpark is the Python interface, and sparklyr is the R interface. Examples explained in this Spark tutorial are with Scala, and the same is also…

Apache Spark compared with Hadoop MapReduce. Spark is a lightning fast cluster computing tool. It runs applications up to 100x faster in memory and 10x faster on disk than Hadoop by reducing the number of read/write cycles to disk and storing intermediate data in memory. Hadoop MapReduce reads and writes from disk, which slows down the processing speed and…
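The read/write-cycle argument above can be illustrated with a toy model. The sketch below is plain Python, not Spark or Hadoop code: `DiskCounter`, `run_disk_between_stages`, `run_in_memory`, and the three `STAGES` are hypothetical names invented for this illustration. It only counts simulated disk accesses for a multi-stage pipeline and says nothing about real-world speedups.

```python
from typing import Callable, List

Stage = Callable[[List[int]], List[int]]


class DiskCounter:
    """Counts simulated disk reads and writes; no real I/O is performed."""

    def __init__(self) -> None:
        self.reads = 0
        self.writes = 0

    def read(self, stored: List[int]) -> List[int]:
        self.reads += 1
        return list(stored)  # the copy stands in for loading from disk

    def write(self, data: List[int]) -> List[int]:
        self.writes += 1
        return list(data)  # the copy stands in for saving to disk


def run_disk_between_stages(
    stages: List[Stage], disk: DiskCounter, on_disk: List[int]
) -> List[int]:
    """Every stage reads its input from disk and writes its output back."""
    stored = on_disk
    for stage in stages:
        data = disk.read(stored)
        stored = disk.write(stage(data))
    return stored


def run_in_memory(
    stages: List[Stage], disk: DiskCounter, on_disk: List[int]
) -> List[int]:
    """Read the input once, keep intermediates in memory, write once."""
    data = disk.read(on_disk)
    for stage in stages:
        data = stage(data)  # the intermediate result never touches disk
    return disk.write(data)


# Three illustrative stages: double, keep multiples of 3, add one.
STAGES: List[Stage] = [
    lambda xs: [x * 2 for x in xs],
    lambda xs: [x for x in xs if x % 3 == 0],
    lambda xs: [x + 1 for x in xs],
]

if __name__ == "__main__":
    for runner in (run_disk_between_stages, run_in_memory):
        counter = DiskCounter()
        result = runner(STAGES, counter, list(range(10)))
        print(runner.__name__, result, counter.reads, counter.writes)
```

Both runners produce the same result. In this model the disk-between-stages version performs one read and one write per stage, while the in-memory version performs one of each in total, whatever the number of stages.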


