Intro To Sentence Embeddings With Transformers

Transformers have completely reshaped the landscape of natural language processing (NLP). Before transformers, we had okay translation and language classification. Sentence transformers (also known as SBERT) use a special training technique focused on yielding high-quality sentence embeddings.

Just as in the TL;DR section of this blog post, let's use the all-MiniLM-L6-v2 model. In the beginning, we used the sentence-transformers library, which is a high-level wrapper library.

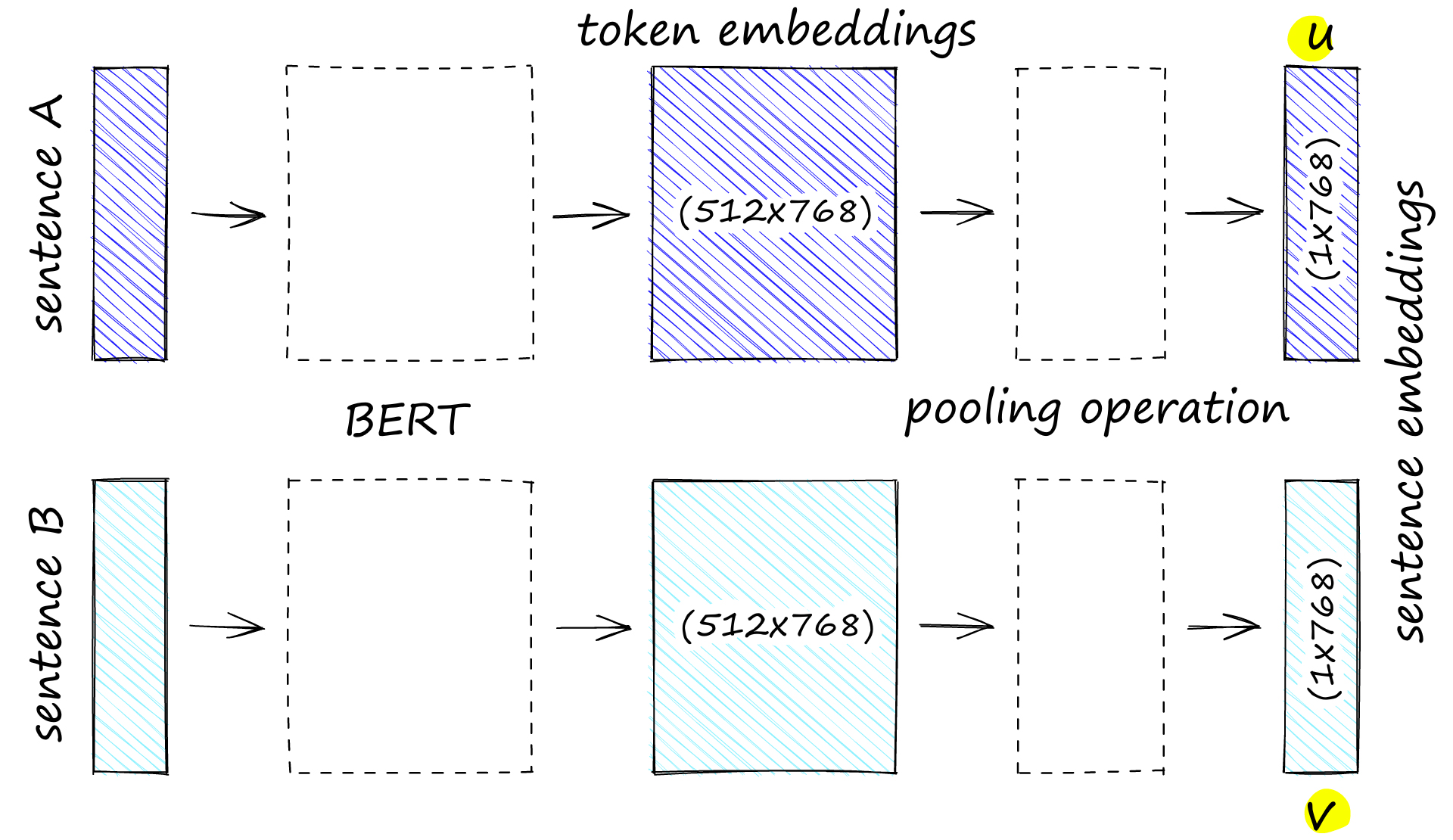

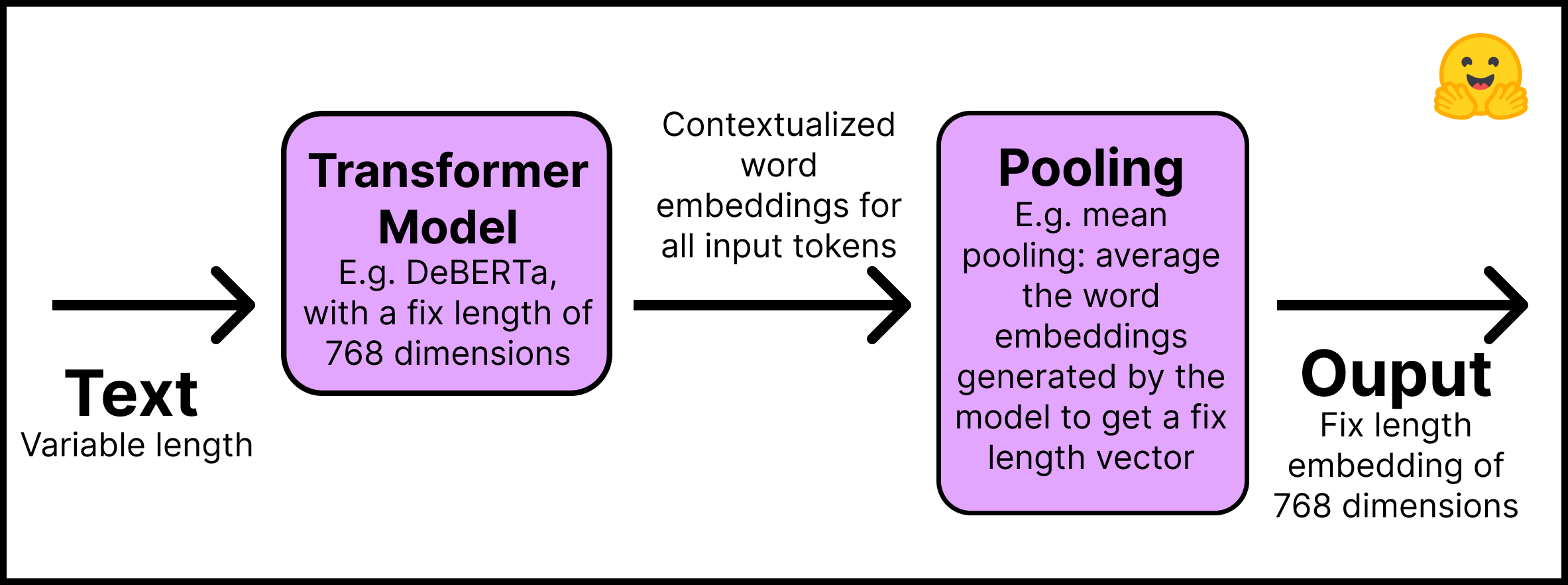

Image by the author.

SentenceTransformers is a Python framework for state-of-the-art sentence, text, and image embeddings. Embeddings can be computed for more than 100 languages, and they can be used easily. Sentence Transformers (a.k.a. SBERT) is the go-to Python module for accessing, using, and training state-of-the-art text and image embedding models. It can be used to compute embeddings using Sentence Transformer models or to calculate similarity scores.

There is also a short section about generating sentence embeddings from BERT word embeddings, focusing specifically on the average-based transformation technique. Let us start with a short Spark NLP introduction and then discuss the details of producing sentence embeddings with transformers, along with some solid results.

Because the embeddings are taken from a hidden layer of the transformer model, their dimension equals the number of neurons in that hidden layer. Since the embeddings are learned by a transformer model, the two example comments in the previous section are now similar. I am not going into the details of this in this blog post.
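To make the average-based technique a little more concrete, here is a minimal sketch of mean pooling over token embeddings. It is an illustration under assumptions, not the exact code of any library: the function name `mean_pool`, the toy arrays, and the hidden size of 4 are all made up for the example, and real token embeddings would come from a transformer's hidden layer.

```python
import numpy as np


def mean_pool(token_embeddings, attention_mask):
    """Average token vectors per sentence, ignoring padding positions.

    token_embeddings: array of shape (batch, seq_len, hidden_size)
    attention_mask:   array of shape (batch, seq_len), 1 = real token, 0 = padding
    (Hypothetical helper written for this example.)
    """
    # Expand the mask so it broadcasts over the hidden dimension.
    mask = attention_mask[..., None].astype(token_embeddings.dtype)
    # Sum only the vectors of real tokens.
    summed = (token_embeddings * mask).sum(axis=1)
    # Count real tokens per sentence; clip to avoid division by zero.
    counts = np.clip(mask.sum(axis=1), 1e-9, None)
    return summed / counts


# Toy input: 2 sentences, 3 token positions, hidden size 4 (assumed values).
token_embeddings = np.array([
    [[1.0, 2.0, 3.0, 4.0], [3.0, 4.0, 5.0, 6.0], [9.0, 9.0, 9.0, 9.0]],  # last position is padding
    [[0.0, 2.0, 0.0, 2.0], [2.0, 0.0, 2.0, 0.0], [4.0, 4.0, 4.0, 4.0]],
])
attention_mask = np.array([
    [1, 1, 0],
    [1, 1, 1],
])

sentence_embeddings = mean_pool(token_embeddings, attention_mask)
print(sentence_embeddings.shape)  # one vector per sentence, each of length hidden_size
```

Note that each pooled vector has the same length as the hidden layer it was taken from, which is why the embedding dimension matches the number of neurons in that layer.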

Install the library with `pip install -U sentence-transformers`. The usage is as simple as importing the class with `from sentence_transformers import SentenceTransformer`, loading a model with `model = SentenceTransformer('paraphrase-MiniLM-L6-v2')`, and passing the sentences we want to encode, for example `sentences = ['This framework generates embeddings for each input sentence']`, to `model.encode()`.

Characteristics of Sentence Transformer (a.k.a. bi-encoder) models:

- Calculates a fixed-size vector representation (embedding) given texts or images.
- Embedding calculation is often efficient, and embedding similarity calculation is very fast.
- Applicable for a wide range of tasks, such as semantic textual similarity, semantic search, and clustering.
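As a rough illustration of why similarity calculation on fixed-size vectors is fast, the sketch below ranks a few vectors against a query by cosine similarity using a single matrix product. The three-dimensional vectors are hand-written toy values standing in for the output of `model.encode()`, and the helper name `cosine_scores` is an assumption made for this example.

```python
import numpy as np


def cosine_scores(query, corpus):
    """Cosine similarity between one query vector and each row of corpus.

    (Hypothetical helper written for this example.)
    """
    # Normalise to unit length so the dot product equals the cosine.
    query = query / np.linalg.norm(query)
    corpus = corpus / np.linalg.norm(corpus, axis=1, keepdims=True)
    return corpus @ query


# Toy embeddings (assumed values); real ones would come from model.encode().
corpus_embeddings = np.array([
    [0.9, 0.1, 0.0],
    [0.0, 1.0, 0.0],
    [0.7, 0.2, 0.1],
])
query_embedding = np.array([1.0, 0.0, 0.0])

scores = cosine_scores(query_embedding, corpus_embeddings)
# Indices of corpus items, most similar first.
ranking = np.argsort(-scores)
print(ranking, scores)
```

The same pattern underlies semantic search and clustering: once every text is a fixed-size vector, comparing texts reduces to cheap vector arithmetic.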


