Implementation Of Word Embedding With Keras

Found 400000 word vectors.

Now, let's prepare a corresponding embedding matrix that we can use in a Keras `Embedding` layer. It's a simple NumPy matrix where the entry at index `i` is the pre-trained vector for the word of index `i` in our vectorizer's vocabulary. The setup is `num_tokens = len(voc) + 2`, `embedding_dim = 100`, `hits = 0`, `misses = 0`, followed by the loop that prepares the embedding matrix.

In a definition such as `e = Embedding(200, 32, input_length=50)`, the vocabulary size is 200, each word is embedded in 32 dimensions, and each input document is 50 words long. The Embedding layer has weights that are learned. If you save your model to file, this will include the weights for the Embedding layer. The output of the Embedding layer is a 2D array with one embedding for each word in the input sequence of words (input document).
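The matrix preparation described above can be sketched with plain NumPy. This is a minimal illustration, not the tutorial's exact code: the tiny `voc` list, the random `embeddings_index`, and `embedding_dim = 4` are stand-ins chosen so the example runs without downloading any pre-trained vectors.

```python
import numpy as np

# Hypothetical stand-ins: `voc` plays the role of the vectorizer vocabulary,
# `embeddings_index` the role of the loaded pre-trained vectors.
voc = ["", "[UNK]", "the", "cat", "sat", "zzzqx"]
embedding_dim = 4  # the text uses 100; kept small here for readability
rng = np.random.default_rng(0)
embeddings_index = {w: rng.normal(size=embedding_dim) for w in ["the", "cat", "sat"]}

# Map each vocabulary word to its integer index.
word_index = dict(zip(voc, range(len(voc))))

num_tokens = len(voc) + 2
hits = 0
misses = 0

# Row i holds the pre-trained vector for the word with index i.
embedding_matrix = np.zeros((num_tokens, embedding_dim))
for word, i in word_index.items():
    vector = embeddings_index.get(word)
    if vector is not None:
        embedding_matrix[i] = vector
        hits += 1
    else:
        # Words without a pre-trained vector keep an all-zero row.
        misses += 1

print(f"Converted {hits} words ({misses} misses)")
```

Words that are missing from the pre-trained set stay as zero rows, which is why the hit and miss counters are worth printing before the matrix is handed to an Embedding layer.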

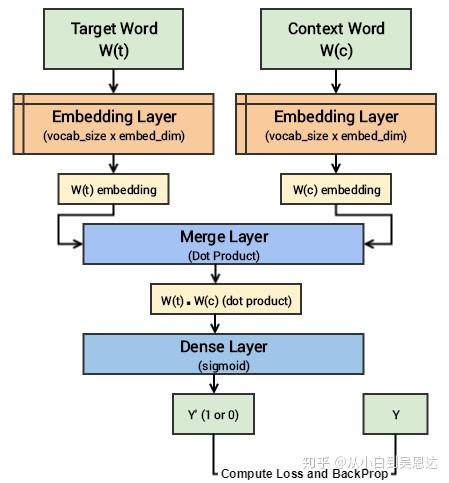

Word2vec Implementation With Keras 2.0 (Munira Syed)

Word2vec is not a single algorithm. Rather, it is a family of model architectures and optimizations that can be used to learn word embeddings from large datasets. Embeddings learned through word2vec have proven successful on a variety of downstream natural language processing tasks. Note: this tutorial is based on Efficient Estimation …

Keras supports two types of APIs: Sequential and Functional. In this section we will see how word embeddings are used with the Keras Sequential API. In the next section, I will explain how to implement the same model via the Keras Functional API. To implement word embeddings, the Keras library contains a layer called `Embedding()`.

For example, `embedding_layer = tf.keras.layers.Embedding(1000, 5)` embeds a 1,000-word vocabulary into 5 dimensions. When you create an Embedding layer, the weights for the embedding are randomly initialized (just like any other layer). During training, they are gradually adjusted via backpropagation.

We will be converting the text into numbers, where each word is represented by an array of numbers whose length depends on the GloVe embedding you select.
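As a rough sketch of the Sequential usage described above, the example below wraps the same `Embedding(1000, 5)` layer in a model and looks up a small batch of token ids. The batch contents are made up for illustration, and the example assumes TensorFlow 2.x is installed.

```python
import tensorflow as tf

# Embed a 1,000-word vocabulary into 5 dimensions.
model = tf.keras.Sequential([
    tf.keras.layers.Embedding(input_dim=1000, output_dim=5),
])

# Two made-up "documents" of three token ids each; id 4 appears in both.
batch = tf.constant([[4, 25, 7], [9, 4, 512]])

# Each token id is replaced by its 5-dimensional vector.
vectors = model(batch)
print(vectors.shape)  # (batch size, sequence length, embedding dimension)

# The learned weights are a single (vocabulary size, dimension) lookup table.
table = model.layers[0].get_weights()[0]
print(table.shape)
```

Because the layer is a lookup table, the same token id always maps to the same vector within a forward pass, regardless of where it appears in the input.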

GitHub CyberZHG keras-word-char-embd: Concatenate Word And Character

The word embedding matrix simply encodes words (of which there are on the order of 10,000) into shorter vectors (e.g., 50 floats). We do not want two unrelated, distinct words to share the same vector. Hence the embedding matrix is best randomized, but also guaranteed not to "collide".

The following snippet builds the skip-gram architecture with the Keras Sequential API. It imports `Merge` from `keras.layers`, `Dense` and `Reshape` from `keras.layers.core`, `Embedding` from `keras.layers.embeddings`, and `Sequential` from `keras.models`. It then starts the word model with `word_model = Sequential()` followed by `word_model.add(Embedding(vocab_size, embed_size, embeddings_initializer="glorot_uniform", input_length=1))`. The snippet breaks off at the next `word_model.add` call.
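The truncated snippet above targets an older Keras release in which the `Merge` layer still existed. A comparable skip-gram scorer can be sketched with the Functional API in TensorFlow 2.x. This is an assumption-laden illustration rather than the original author's code: `vocab_size` and `embed_size` are hypothetical values, and a `Dot` layer stands in for the old `Merge` step.

```python
import tensorflow as tf
from tensorflow.keras import layers

vocab_size = 50  # hypothetical vocabulary size
embed_size = 8   # hypothetical embedding dimension

# One integer id per example for the target word and for the context word.
target_in = layers.Input(shape=(1,), dtype="int32")
context_in = layers.Input(shape=(1,), dtype="int32")

# Separate embedding tables for target and context words.
word_emb = layers.Embedding(
    vocab_size, embed_size, embeddings_initializer="glorot_uniform"
)(target_in)
context_emb = layers.Embedding(
    vocab_size, embed_size, embeddings_initializer="glorot_uniform"
)(context_in)

# Drop the length-1 sequence axis: (batch, 1, embed_size) -> (batch, embed_size).
word_vec = layers.Reshape((embed_size,))(word_emb)
context_vec = layers.Reshape((embed_size,))(context_emb)

# Dot product of the two vectors (replaces the old Merge layer),
# squashed to a probability that the pair is a true (target, context) pair.
score = layers.Dot(axes=1)([word_vec, context_vec])
prob = layers.Dense(1, activation="sigmoid")(score)

model = tf.keras.Model(inputs=[target_in, context_in], outputs=prob)
model.compile(loss="binary_crossentropy", optimizer="adam")
```

Training such a model would use pairs of word ids labeled 1 for observed context pairs and 0 for sampled negatives. After training, the rows of the target embedding table serve as the word vectors.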
