GitHub: Mikeroyal Apache Spark Guide

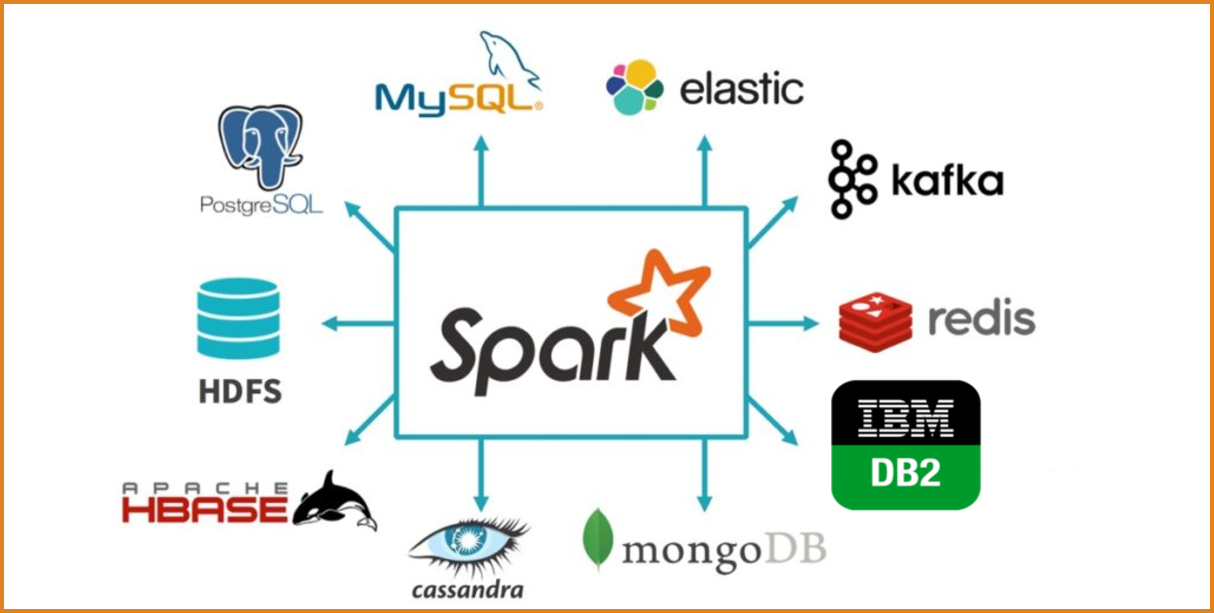

Apache Spark™ is a unified analytics engine for large-scale data processing. It provides high-level APIs in Scala, Java, Python, and R, and an optimized engine that supports general computation graphs for data analysis. It also supports a rich set of higher-level tools, including Spark SQL for SQL and DataFrames and MLlib for machine learning.

Apache Ignite® is a distributed database for high-performance computing with in-memory speed. Ignite's main goal is to provide performance and scalability by partitioning and distributing data within a cluster, which lets the cluster process data very quickly.
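The following is a minimal plain-Python sketch of partitioning and distributing data within a cluster. It is not Ignite code. It uses a two-step placement scheme:

1. A stable hash maps each key to one of a fixed number of partitions.
2. Rendezvous (highest-random-weight) hashing assigns each partition to a node.

Ignite's default affinity function is also rendezvous-based. However, the following are illustrative assumptions, not Ignite's API: the partition count, the hash functions, the node names, and the helpers `partition_for`, `owner_for`, and `assignment`.

```python
import hashlib
import zlib
from collections import Counter

NUM_PARTITIONS = 16  # illustrative assumption; not Ignite's default


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a key to a partition id.

    crc32 is used instead of hash() because Python salts str hashes per
    process, while crc32 gives the same answer on every node.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def _weight(partition: int, node: str) -> int:
    """Deterministic pseudo-random score for a (partition, node) pair."""
    digest = hashlib.sha256(f"{partition}:{node}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def owner_for(partition: int, nodes: list) -> str:
    """Rendezvous hashing: the node with the highest score owns the partition."""
    return max(nodes, key=lambda node: _weight(partition, node))


def assignment(nodes: list, num_partitions: int = NUM_PARTITIONS) -> dict:
    """Build the partition -> owning-node map for the whole cluster."""
    return {p: owner_for(p, nodes) for p in range(num_partitions)}


if __name__ == "__main__":
    nodes = ["node-a", "node-b", "node-c"]
    before = assignment(nodes)
    after = assignment(nodes + ["node-d"])
    moved = [p for p in before if before[p] != after[p]]
    print("key 'user:42' lives in partition", partition_for("user:42"))
    print("partitions per node:", Counter(before.values()))
    print("partitions moved after adding node-d:", moved)
    # Every partition that changed owner moved to the new node.
    assert all(after[p] == "node-d" for p in moved)
```

The final assertion shows why rendezvous hashing is a common choice. When a node joins, only the partitions it wins move to it, and every other partition keeps its owner. Rebalancing therefore touches a fraction of the data instead of reshuffling everything.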

Azure Databricks is a fast and collaborative Apache Spark-based big data analytics service designed for data science and data engineering. Azure Databricks sets up your Apache Spark environment in minutes, autoscales, and lets you collaborate on shared projects in an interactive workspace.

Quick start: this tutorial provides a quick introduction to using Spark. We will first introduce the API through Spark's interactive shell (in Python or Scala), then show how to write applications in Java, Scala, and Python. To follow along with this guide, first download a packaged release of Spark from the Spark website.

Apache Spark™ documentation: setup instructions, programming guides, and other documentation are available for each stable version of Spark, as well as for preview releases. The documentation covers getting started with Spark, as well as the built-in components MLlib, Spark Streaming, and GraphX.

Spark 3.5.2 works with Python 3.8+. It can use the standard CPython interpreter, so C libraries like NumPy can be used. It also works with PyPy 7.3.6+. Spark applications in Python can be run either with the bin/spark-submit script, which includes Spark at runtime, or by declaring PySpark as a dependency in your setup.py.
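For the setup.py route, the dependency goes in the `install_requires` list. The fragment below is a minimal sketch that assumes a setuptools-based setup.py and a cluster running Spark 3.5.2. Pin whichever version you actually deploy against.

```python
# Fragment of setup.py: pass this list as setup(install_requires=install_requires).
# Pin PySpark to the same version as the Spark runtime you target
# (3.5.2 here is an assumption matching the version discussed above).
install_requires = [
    "pyspark==3.5.2",
]
```

Pinning an exact version keeps the PySpark client library in step with the cluster's Spark runtime.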

Apache Spark 3.5 is a framework that is supported in Scala, Python, R, and Java. It has several implementations:

- Spark, the default interface for Scala and Java.
- PySpark, the Python interface.
- sparklyr, the R interface.

Examples explained in this Spark tutorial are in Scala, and the same is also…

Apache Liminal (incubating) is an end-to-end platform for data engineers and scientists, allowing them to build, train, and deploy machine learning models in a robust and agile way. Databand is an observability platform built on top of Airflow. DataHub is a metadata platform for the modern data stack.

