Gaussian Processes Simple Complexities

A Gaussian process $G$ on $X$ is defined by two functions: the mean function $m: X \to \mathbb{R}$ and the covariance function $k: X \times X \to \mathbb{R}$. We have seen that a valid covariance function is necessarily a kernel. $G$ assigns Gaussian random variables to elements of $X$.

Gaussian Process Definition

A Gaussian process is a collection of random variables, any finite number of which have a joint Gaussian distribution. A function $f$ is a Gaussian process with mean function $m(x)$ and covariance kernel $k(x_i, x_j)$ if, for every finite set of inputs $x_1, \dots, x_n$,

$$[f(x_1), \dots, f(x_n)] \sim \mathcal{N}(\boldsymbol{\mu}, K), \qquad \mu_i = m(x_i), \qquad K_{ij} = k(x_i, x_j).$$

Linear basis function models: a slightly more…
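A minimal sketch of the definition: for a finite set of inputs, build the mean vector $\mu_i = m(x_i)$ and the Gram matrix $K_{ij} = k(x_i, x_j)$. Then draw one joint sample through a Cholesky factor $K = LL^\top$ as $f = \mu + Lz$ with $z \sim \mathcal{N}(0, I)$. The squared-exponential kernel, the zero mean function, the diagonal jitter, and every helper name are illustrative assumptions, not taken from the text. Plain Python keeps it dependency-free.

```python
import math
import random


def se_kernel(x1, x2, length_scale=1.0):
    # Illustrative choice (assumption): squared-exponential kernel.
    return math.exp(-0.5 * ((x1 - x2) / length_scale) ** 2)


def zero_mean(x):
    # Illustrative choice (assumption): m(x) = 0.
    return 0.0


def gram_matrix(xs, k):
    # K_ij = k(x_i, x_j) for the finite set of inputs xs.
    return [[k(xi, xj) for xj in xs] for xi in xs]


def cholesky(a, jitter=1e-9):
    # Lower-triangular L with L L^T = a + jitter * I.
    # The small jitter (an assumption) guards against round-off
    # when the Gram matrix is nearly singular.
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][p] * L[j][p] for p in range(j))
            if i == j:
                L[i][i] = math.sqrt(a[i][i] + jitter - s)
            else:
                L[i][j] = (a[i][j] - s) / L[j][j]
    return L


def sample_gp(xs, m, k, rng):
    # One draw of [f(x_1), ..., f(x_n)] ~ N(mu, K) with mu_i = m(x_i).
    mu = [m(x) for x in xs]
    L = cholesky(gram_matrix(xs, k))
    z = [rng.gauss(0.0, 1.0) for _ in xs]  # z ~ N(0, I)
    # f = mu + L z has mean mu and covariance L L^T (= K up to jitter).
    return [mu[i] + sum(L[i][p] * z[p] for p in range(i + 1)) for i in range(len(xs))]


xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
sample = sample_gp(xs, zero_mean, se_kernel, random.Random(0))
print(sample)
```

Each call with a fresh random state gives a different vector. Every such vector is a draw from the same finite-dimensional Gaussian that the definition describes.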

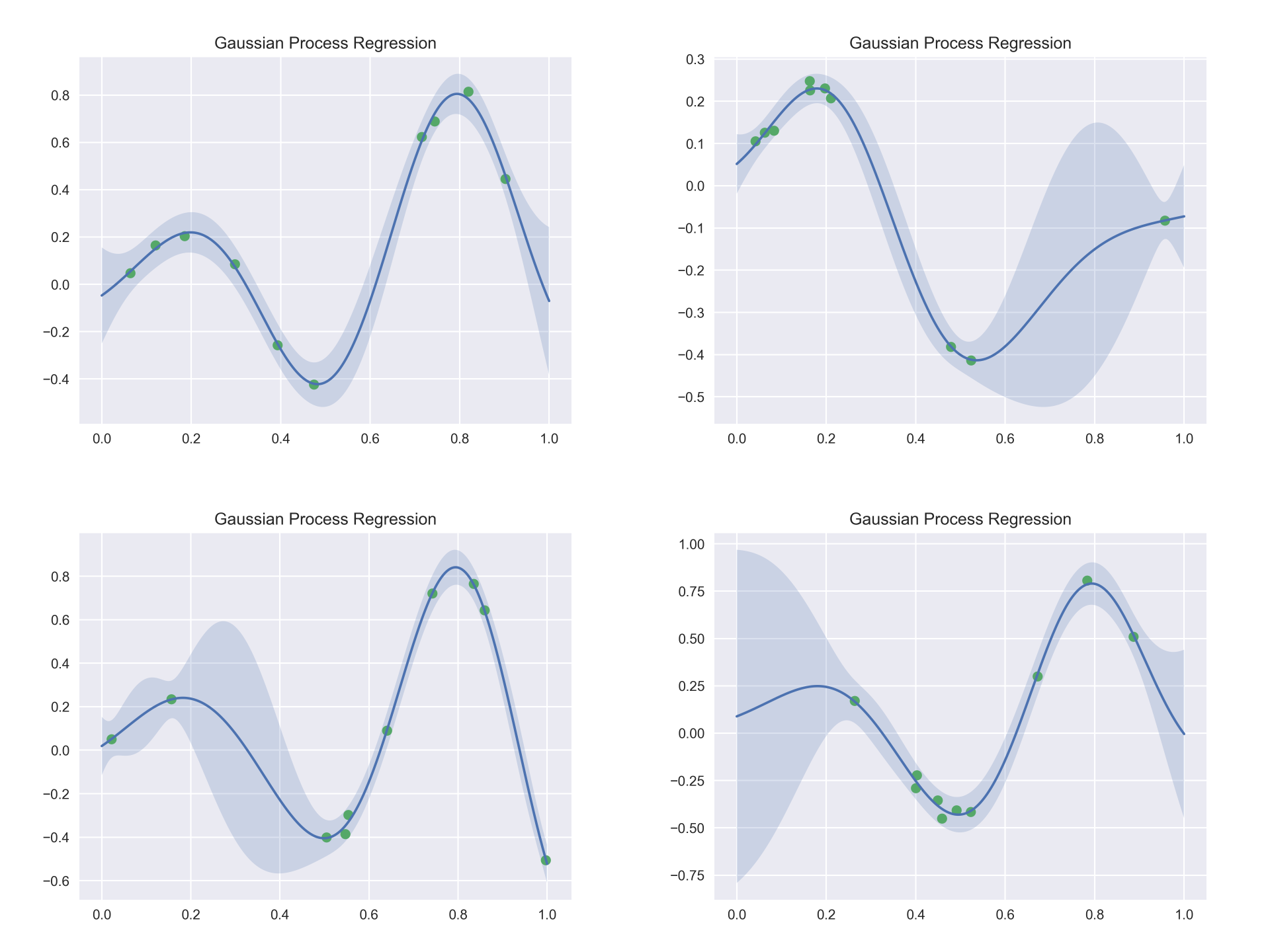

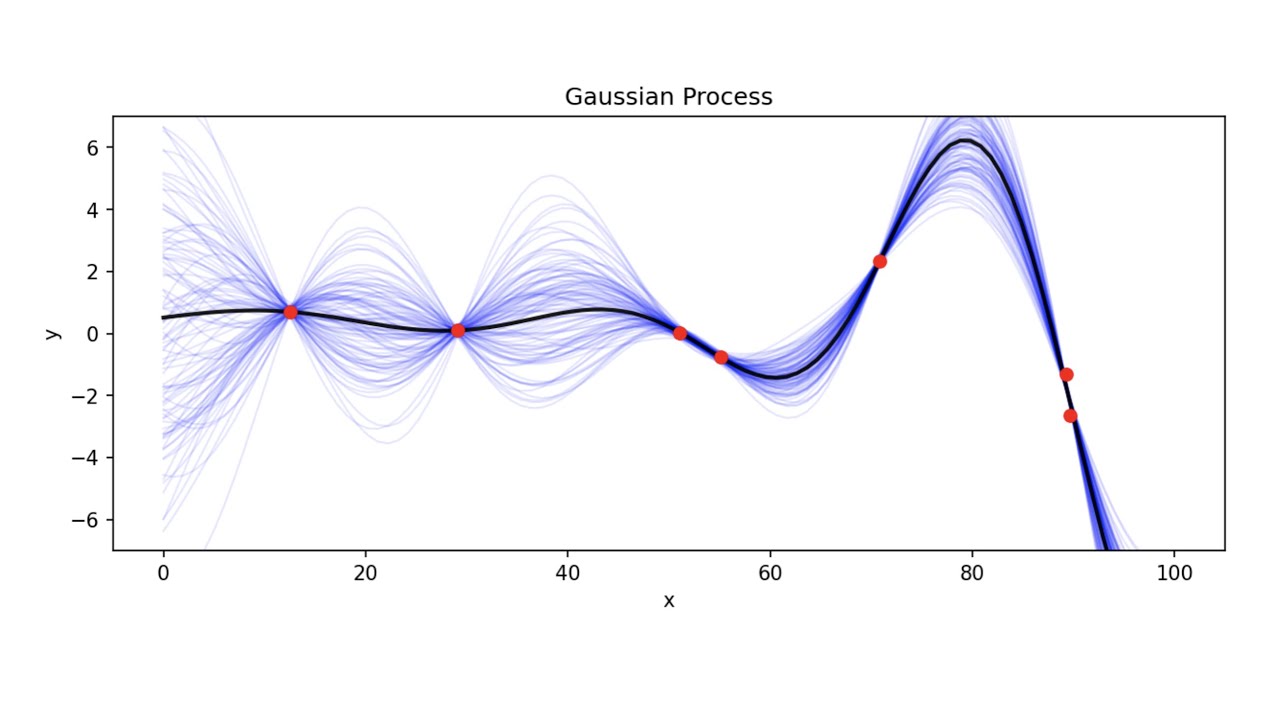

What Is A Gaussian Process

Gaussian processes (GPs) are a nonparametric supervised learning method used to solve regression and probabilistic classification problems. Their advantages are that the prediction interpolates the observations (at least for regular kernels), and that the prediction is probabilistic (Gaussian), so one can compute empirical confidence intervals.

Gaussian processes are an incredible class of models. Very few machine learning algorithms give you an accurate measure of uncertainty for free while still being so flexible. The problem is that GPs are conceptually difficult to understand.

In Gaussian processes it is often assumed that $\mu = 0$, which simplifies the equations needed for conditioning. We can always assume such a distribution, even if $\mu \neq 0$, and add $\mu$ back to the resulting function values after the prediction step. This process is also called centering of the data.

When we only ask about the function at a finite number of points, the process reduces to computing with the related finite-dimensional (multivariate Gaussian) distribution. This is the key to why Gaussian processes are feasible. Let us look at an example. Consider the Gaussian process given by

$$f \sim \mathcal{GP}(m, k), \qquad m(x) = \tfrac{1}{4}x^2, \qquad k(x, x') = \exp\!\left(-\tfrac{1}{2}(x - x')^2\right).$$

To understand this process, we can draw samples from the function $f$.
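A minimal sketch of centering for the example process $f \sim \mathcal{GP}(m, k)$ with $m(x) = \tfrac{1}{4}x^2$ and $k(x, x') = \exp(-\tfrac{1}{2}(x - x')^2)$. It assumes noise-free observations, since the text does not specify a noise model. The sketch subtracts $m$ from the training targets and conditions a zero-mean GP with the standard posterior mean $k(x_*, X)K^{-1}(\mathbf{y} - m(X))$. It then adds $m(x_*)$ back. The training data, the jitter, and the helper names `solve_spd` and `posterior_mean` are hypothetical. The sketch also illustrates the interpolation property: at the training inputs, the posterior mean reproduces the observations.

```python
import math


def m(x):
    # Mean function of the example: m(x) = x^2 / 4
    return 0.25 * x * x


def k(x1, x2):
    # Covariance function of the example: k(x, x') = exp(-(x - x')^2 / 2)
    return math.exp(-0.5 * (x1 - x2) ** 2)


def solve_spd(a, b, jitter=1e-10):
    # Hypothetical helper: solve (a + jitter*I) v = b via Cholesky.
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][p] * L[j][p] for p in range(j))
            if i == j:
                L[i][i] = math.sqrt(a[i][i] + jitter - s)
            else:
                L[i][j] = (a[i][j] - s) / L[j][j]
    # Forward substitution: L y = b
    y = [0.0] * n
    for i in range(n):
        y[i] = (b[i] - sum(L[i][p] * y[p] for p in range(i))) / L[i][i]
    # Back substitution: L^T v = y
    v = [0.0] * n
    for i in reversed(range(n)):
        v[i] = (y[i] - sum(L[p][i] * v[p] for p in range(i + 1, n))) / L[i][i]
    return v


def posterior_mean(x_train, y_train, x_test):
    # 1. Center: subtract the prior mean so a zero-mean GP can be conditioned.
    y_centered = [y - m(x) for x, y in zip(x_train, y_train)]
    K = [[k(xi, xj) for xj in x_train] for xi in x_train]
    alpha = solve_spd(K, y_centered)  # alpha = K^{-1} (y - m(X))
    # 2. Zero-mean prediction k(x*, X) alpha; 3. add m(x*) back.
    return [m(xq) + sum(k(xq, xi) * a for xi, a in zip(x_train, alpha)) for xq in x_test]


x_train = [-2.0, 0.0, 1.5]  # hypothetical inputs
y_train = [0.5, -0.3, 1.2]  # hypothetical noise-free observations
print(posterior_mean(x_train, y_train, x_train))  # approximately y_train
print(posterior_mean(x_train, y_train, [10.0]))   # approximately [m(10)] = [25.0]
```

Far from the data the kernel terms vanish, so the prediction falls back to the prior mean $m(x_*)$. That is exactly the value centering subtracted and then restored.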

Lecture 7.3: Gaussian Processes

Gaussian processes are a powerful algorithm for both regression and classification. Their greatest practical advantage is that they can give a reliable estimate of their own uncertainty. By the end of this maths-free, high-level post I aim to have given you an intuitive idea of what a Gaussian process is and what makes them unique among…

From Linear Model to Gaussian Process

Linear Model

Let's start from a simple linear model, $f(x) = a_0 + a_1 x$, where $x$ is the input (a scalar), $a_0$ is the bias, and $a_1$ is the slope. We put a prior belief on the parameters: $a_0, a_1 \sim \mathcal{N}(0, 1)$.
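Under this prior, the linear model is itself a Gaussian process. Assume $a_0$ and $a_1$ are independent; the text states only $a_0, a_1 \sim \mathcal{N}(0, 1)$. Then $f(x)$ is a linear combination of jointly Gaussian variables with $\mathbb{E}[f(x)] = 0$ and

$$\operatorname{Cov}(f(x), f(x')) = \mathbb{E}[a_0^2] + (x + x')\,\mathbb{E}[a_0 a_1] + x x'\,\mathbb{E}[a_1^2] = 1 + x x',$$

so $f \sim \mathcal{GP}(0, k)$ with $k(x, x') = 1 + x x'$. The Monte Carlo sketch below checks that covariance numerically. It uses hypothetical helper names and a fixed seed.

```python
import random


def linear_kernel(x1, x2):
    # Cov(f(x1), f(x2)) = Var(a0) + x1 * x2 * Var(a1) = 1 + x1 * x2
    # (assumes a0 and a1 are independent N(0, 1)).
    return 1.0 + x1 * x2


def empirical_cov(x1, x2, n_samples=100_000, seed=0):
    # Monte Carlo estimate of Cov(f(x1), f(x2)) for f(x) = a0 + a1 * x.
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        a0 = rng.gauss(0.0, 1.0)  # bias
        a1 = rng.gauss(0.0, 1.0)  # slope
        # Both inputs share (a0, a1): two evaluations of one random line.
        total += (a0 + a1 * x1) * (a0 + a1 * x2)
    # E[f(x)] = 0, so the average product estimates the covariance.
    return total / n_samples


for x1, x2 in [(1.0, 2.0), (-1.0, 0.5), (0.0, 3.0)]:
    print(x1, x2, round(empirical_cov(x1, x2), 3), linear_kernel(x1, x2))
```

Since $\operatorname{Var}(f(x)) = 1 + x^2$, samples from this GP are straight lines whose spread grows away from the origin.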
