Example Of Spark Transformations

In our word count example, we add a value of 1 to each word. The result is a pair RDD of key-value pairs, with the word (a string) as the key and 1 (an int) as the value; in the Scala API, the key-value operations on such an RDD come from `PairRDDFunctions`. Using `map()`: `rdd3 = rdd2.map(lambda x: (x, 1))`. Collecting and printing `rdd3` shows each word paired with 1.

Spark 3.5.2 works with Python 3.8+. It can use the standard CPython interpreter, so C libraries like NumPy can be used. It also works with PyPy 7.3.6+. Spark applications in Python can either be run with the `bin/spark-submit` script, which includes Spark at runtime, or by including it in your `setup.py`.
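The pairing step described above can be traced without a cluster. The following is a minimal plain-Python sketch, not Spark code: the sample sentence is a hypothetical input, the list comprehension stands in for `rdd2.map(lambda x: (x, 1))`, and the helper `count_pairs` is an assumed stand-in for a later `reduceByKey` step that the text does not show.

```python
# Plain-Python stand-in for the word count pairing step (no Spark required).
# The sample sentence is a hypothetical input chosen for illustration.
words = "spark is fast and spark is simple".split()

# Mirrors rdd3 = rdd2.map(lambda x: (x, 1)): every word becomes a (word, 1) pair.
pairs = [(word, 1) for word in words]


def count_pairs(pairs):
    """Sum the values per key, as reduceByKey(lambda a, b: a + b) would in Spark."""
    counts = {}
    for key, value in pairs:
        counts[key] = counts.get(key, 0) + value
    return counts


word_counts = count_pairs(pairs)
print(pairs[:3])
print(word_counts)
```

Keeping the pairs as plain `(key, value)` tuples is what lets a per-key aggregation run afterwards; in PySpark the same tuple shape is what key-based RDD methods expect.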

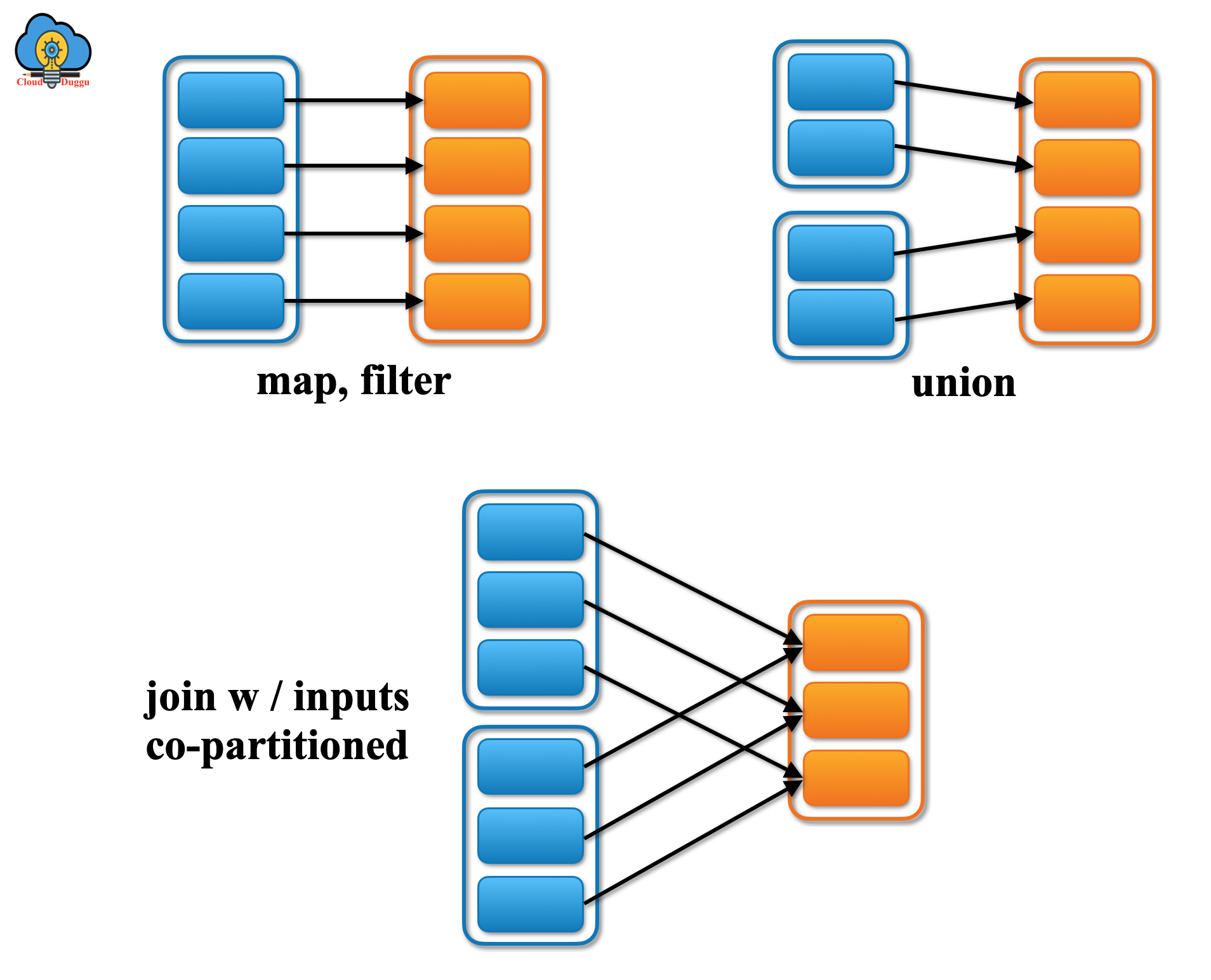

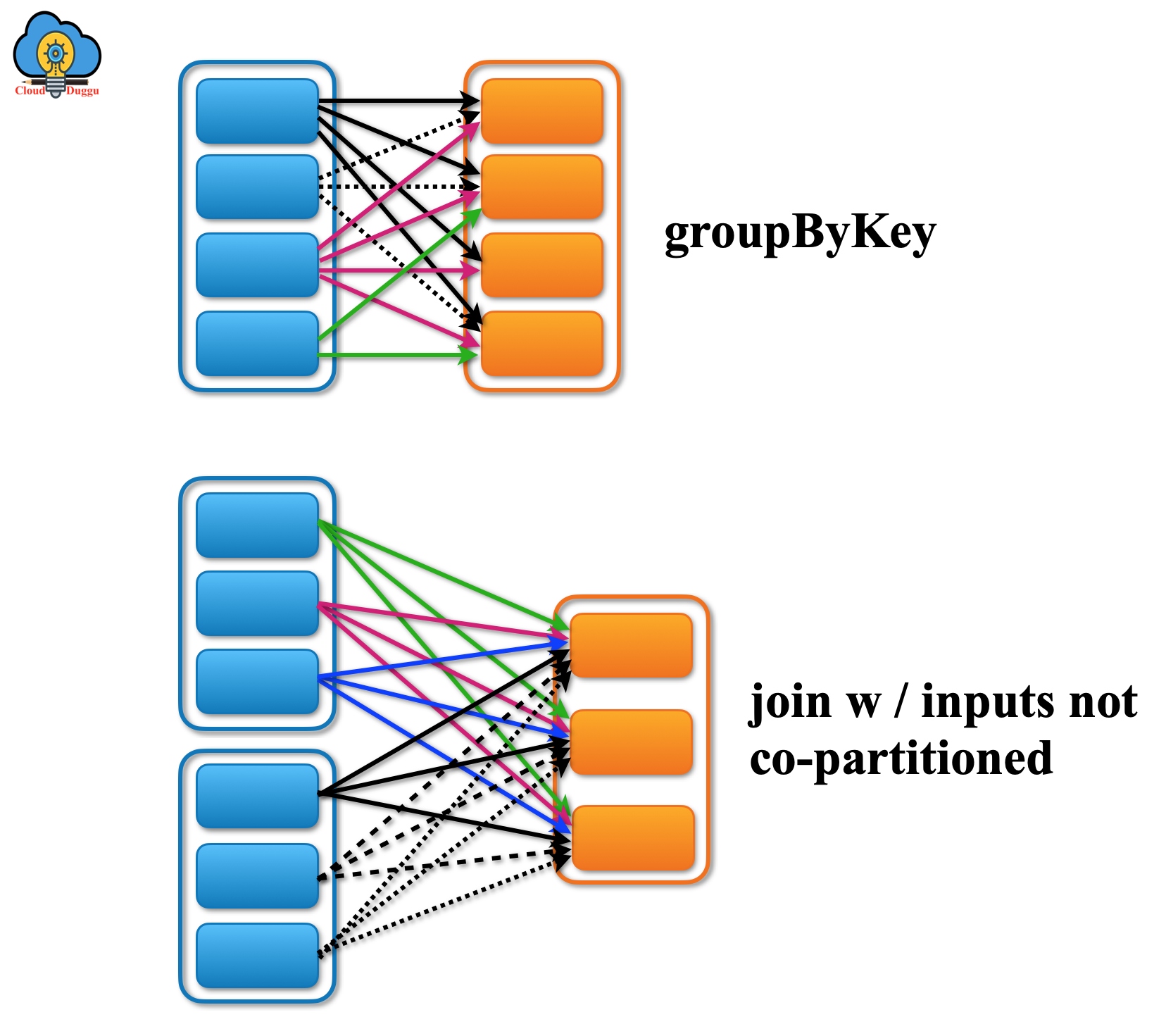

Note: As you would probably expect when using Python, RDDs can hold objects of multiple types because Python is dynamically typed. Some Spark transformation examples in Python load a CSV file. A snippet of this CSV file:

| year | first name | county | sex | count |
|---|---|---|---|---|
| 2012 | dominic | cayuga | m | 6 |
| 2012 | addison | onondaga | f | 14 |
| 2012 | … | | | |

PySpark has two `transform()` functions: `pyspark.sql.DataFrame.transform()`, available since Spark 3.0, and `pyspark.sql.functions.transform()`.

Spark RDD operations. The two types of Apache Spark RDD operations are transformations and actions. A transformation is a function that produces a new RDD from existing RDDs; an action is performed when we want to work with the actual dataset.
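The split between transformations and actions is easier to see in a toy model. The sketch below is plain Python, not Spark: `LazyDataset` is a hypothetical class invented for illustration, and its `map`, `filter`, and `collect` methods only imitate the behavior of the RDD methods with the same names. It assumes the usual Spark behavior that transformations are recorded lazily and run only when an action is called.

```python
class LazyDataset:
    """Hypothetical toy class: records transformations, runs them only on an action."""

    def __init__(self, source, steps=()):
        self._source = source
        self._steps = tuple(steps)

    # Transformations: return a new LazyDataset and compute nothing yet.
    def map(self, fn):
        return LazyDataset(self._source, self._steps + (("map", fn),))

    def filter(self, predicate):
        return LazyDataset(self._source, self._steps + (("filter", predicate),))

    # Action: run the recorded steps and return the values to the caller.
    def collect(self):
        items = iter(self._source)
        for kind, fn in self._steps:
            items = map(fn, items) if kind == "map" else filter(fn, items)
        return list(items)


calls = []  # records every element the mapping function actually touches


def double(x):
    calls.append(x)
    return x * 2


numbers = LazyDataset([1, 2, 3, 4])
big = numbers.map(double).filter(lambda x: x > 4)  # transformations only
calls_before_action = len(calls)                   # still 0: nothing has run
result = big.collect()                             # the action triggers the work
print(calls_before_action, result)
```

Each transformation returns a new object and leaves `numbers` untouched, which matches the description of a transformation producing a new dataset from an existing one.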

Transformation: a Spark operation that reads a DataFrame, manipulates some of the columns, and returns another DataFrame (eventually). Examples of transformations include `filter` and `select`.

RDDs support two categories of operations: transformations, which create a new dataset from an existing one, and actions, which return a value to the driver program after running a computation on the dataset. Key difference between transformations and actions: transformations are …
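To make `filter` and `select` concrete, here is a small plain-Python sketch, not Spark code. It uses the two complete rows as reconstructed from the CSV snippet quoted earlier; the helpers `read_rows`, `filter_rows`, and `select_columns` are hypothetical names that only imitate what `DataFrame.filter` and `DataFrame.select` do, and the threshold of 10 is an arbitrary choice for illustration.

```python
import csv
import io

# The two complete rows from the CSV snippet; the rest of the file is not shown.
CSV_TEXT = """year,first name,county,sex,count
2012,dominic,cayuga,m,6
2012,addison,onondaga,f,14
"""


def read_rows(text):
    """Parse CSV text into a list of dicts keyed by the header names."""
    return list(csv.DictReader(io.StringIO(text)))


def filter_rows(rows, predicate):
    """Stand-in for DataFrame.filter: keep rows matching the predicate."""
    return [row for row in rows if predicate(row)]


def select_columns(rows, *columns):
    """Stand-in for DataFrame.select: keep only the named columns."""
    return [{name: row[name] for name in columns} for row in rows]


rows = read_rows(CSV_TEXT)
frequent = filter_rows(rows, lambda row: int(row["count"]) > 10)
names = select_columns(frequent, "first name", "county")
print(names)
```

Both helpers return new lists and leave `rows` unchanged, mirroring how each transformation yields another DataFrame instead of modifying its input.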
