Data Fitting Basic Curve Fitting Part 3

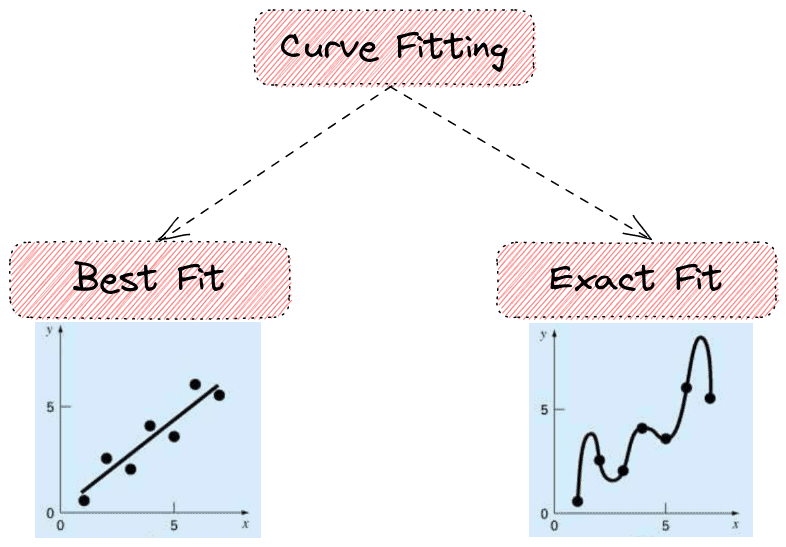

Data Fitting Basic Curve Fitting Part 3 (YouTube)

Data Science for Biologists. Data Fitting: MATLAB Implementation, Part 3. Course website: data4bio. Instructors: Nathan Kutz (faculty.washington.edu/kutz), Bing Brun…

Basic Curve Fitting: Lesson Overview

Analyzing measured data with a theoretical model is a common task for scientists and engineers. Often we end up "fitting" a dataset to some mathematical function, such as a line, a sinusoidal wave, or an exponentially decaying function.
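To make "fitting" concrete, here is a minimal NumPy sketch (not the course's MATLAB code) that fits a sinusoid to synthetic noisy data. It assumes the angular frequency `omega` is known. Under that assumption the model $y \approx a \sin(\omega t) + b \cos(\omega t) + c$ is linear in the unknowns $a$, $b$, $c$, so ordinary least squares via `np.linalg.lstsq` applies. The data, seed, and variable names are hypothetical.

```python
import numpy as np

# Hypothetical data: noisy samples of a sinusoid with a known angular frequency
rng = np.random.default_rng(0)
t = np.linspace(0, 10, 200)
omega = 2.0  # assumed known in this sketch
y = 3.0 * np.sin(omega * t) + 1.5 * np.cos(omega * t) + 0.5 + rng.normal(scale=0.2, size=t.size)

# The model a*sin(omega t) + b*cos(omega t) + c is linear in (a, b, c),
# so each column of the design matrix is one basis function evaluated at t.
A = np.column_stack([np.sin(omega * t), np.cos(omega * t), np.ones_like(t)])

# Least-squares solve: minimizes sum((A @ coeffs - y) ** 2)
coeffs, residuals, rank, sv = np.linalg.lstsq(A, y, rcond=None)
a, b, c = coeffs
amplitude = np.hypot(a, b)  # combined amplitude of the sin and cos parts

print(f"a={a:.2f}, b={b:.2f}, c={c:.2f}, amplitude={amplitude:.2f}")
```

If the frequency is also unknown, the model is no longer linear in its parameters, and a nonlinear optimizer (for example, `scipy.optimize.curve_fit`) is the usual next step.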

Curve Fitting with Log Functions in Linear Regression

A log transformation allows linear models to fit curves that would otherwise require nonlinear regression. For instance, you can express the nonlinear function

$$y = e^{b_0} x_1^{b_1} x_2^{b_2}$$

in the linear form

$$\ln y = b_0 + b_1 \ln x_1 + b_2 \ln x_2.$$

Data Science for Biologists. Data Fitting: Basic Curve Fitting, Part 4. Course website: data4bio. Instructors: Nathan Kutz (faculty.washington.edu/kutz), Bing Brunto…

Example 1 code snippet. Let's look at an example of curve fitting using the least squares method. We start by creating some sample data: `x_data = np.linspace(-10, 10, 100)` and `y_data = 5 * x_data ** 3 - 2 * x_data ** 2 + 10 * x_data - 5 + np.random.normal(scale=100, size=100)`. In this example, we have generated 100 points that follow a cubic polynomial with added Gaussian noise.

If you have a set of points $f(x, y) \to z$ and want a function that passes through all of them, you could simply use a spline. If instead you have a known function and want to adjust its parameters to minimize the RMS error, treat $(x, y)$ as a single composite object $p$ (e.g., as if it were a complex number or a 2-vector) and apply the analog of the 2D case to $f(p) \to z$.
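The log-transform trick for $y = e^{b_0} x_1^{b_1} x_2^{b_2}$ can be sketched in NumPy as follows. The data are synthetic, generated with hypothetical true values $b_0 = 0.5$, $b_1 = 1.2$, $b_2 = -0.7$ and multiplicative noise, so $y$ stays positive and $\ln y$ is defined. After taking logs, a single linear least-squares solve recovers the coefficients.

```python
import numpy as np

# Hypothetical synthetic data for y = exp(b0) * x1**b1 * x2**b2
rng = np.random.default_rng(1)
n = 200
x1 = rng.uniform(1, 10, n)
x2 = rng.uniform(1, 10, n)
b0_true, b1_true, b2_true = 0.5, 1.2, -0.7

# Multiplicative noise keeps y > 0, so ln(y) is always defined
y = np.exp(b0_true) * x1**b1_true * x2**b2_true * np.exp(rng.normal(scale=0.05, size=n))

# After the log transform, ln y = b0 + b1*ln x1 + b2*ln x2 is linear in (b0, b1, b2)
X = np.column_stack([np.ones(n), np.log(x1), np.log(x2)])
(b0, b1, b2), *_ = np.linalg.lstsq(X, np.log(y), rcond=None)

print(f"b0={b0:.3f}, b1={b1:.3f}, b2={b2:.3f}")
```

This minimizes squared error in $\ln y$, not in $y$, so it effectively weights relative rather than absolute errors. If the noise is additive on the original scale, a direct nonlinear fit may be more appropriate.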

Curve Fitting In R With Examples

Despite its name, linear regression can fit curves. The most common method is to include polynomial terms in the linear model. Polynomial terms are independent variables raised to a power, such as squared or cubed terms; the model is still "linear" because it is linear in its coefficients. To decide which polynomial terms to include, count the number of bends in the curve and add one: a curve with one bend calls for a quadratic (squared) term, and a curve with two bends calls for a cubic term.
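Here is a minimal sketch of polynomial regression in Python with NumPy, to match the document's other snippets. The data mimic the cubic trend of Example 1; the coefficients, noise level, and random seed are illustrative assumptions. A cubic has two bends, so the bend-counting rule suggests order 3, and `np.polyfit` solves the resulting linear least-squares problem.

```python
import numpy as np

rng = np.random.default_rng(42)
x_data = np.linspace(-10, 10, 100)

# Illustrative cubic trend plus Gaussian noise (coefficients are assumptions)
y_data = 5 * x_data**3 - 2 * x_data**2 + 10 * x_data - 5 + rng.normal(scale=100, size=x_data.size)

# Two bends -> order 2 + 1 = 3. np.polyfit returns the least-squares
# coefficients of c3*x^3 + c2*x^2 + c1*x + c0, highest power first.
coeffs = np.polyfit(x_data, y_data, deg=3)
y_fit = np.polyval(coeffs, x_data)

# Residual sum of squares of the fitted curve
rss = np.sum((y_data - y_fit) ** 2)
print("coefficients (x^3, x^2, x, 1):", np.round(coeffs, 2))
print("residual sum of squares:", round(float(rss), 1))
```

In R, the analogous model is typically written as `lm(y ~ poly(x, degree = 3, raw = TRUE))`.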

Introduction To Curve Fitting (Baeldung On Computer Science)

Step 1: Visualize the problem. First, we plot the points. The points, while scattered, appear to follow a linear pattern. Clearly, no straight line passes through all of them, so we do our best to get as close as possible, using least squares, of course.
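For a straight line $y = mx + c$, the least-squares slope and intercept have a closed form: $m = \sum_i (x_i - \bar{x})(y_i - \bar{y}) / \sum_i (x_i - \bar{x})^2$ and $c = \bar{y} - m\bar{x}$. Below is a pure-Python sketch using five made-up, roughly linear points; the function name `fit_line` is hypothetical.

```python
def fit_line(xs, ys):
    """Return (slope, intercept) of the least-squares line through (xs, ys)."""
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Centered cross-products and squares
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up, roughly linear points: no single line passes through all of them
xs = [1, 2, 3, 4, 5]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]
slope, intercept = fit_line(xs, ys)
residuals = [y - (slope * x + intercept) for x, y in zip(xs, ys)]

print(f"slope={slope:.2f}, intercept={intercept:.2f}")  # slope=1.99, intercept=0.05
print(f"sum of squared residuals={sum(r * r for r in residuals):.3f}")
```

The residuals of a least-squares line fitted with an intercept always sum to zero, which makes a quick sanity check on an implementation.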
