Build a Text Classification Model Using Sentence Embeddings

Sentence Transformers (a.k.a. SBERT) is the go-to Python module for accessing, using, and training state-of-the-art text and image embedding models. It can be used to compute embeddings with Sentence Transformer models or to calculate similarity scores. This is a beginner-friendly, hands-on NLP video: build a text classification model using sentence embeddings (Sentence Transformers) and learn how to use embeddings.
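Similarity scores between sentence embeddings are commonly computed with cosine similarity (Sentence Transformers exposes this as `util.cos_sim`). The dependency-free sketch below shows the calculation itself. The 4-dimensional vectors are hypothetical stand-ins for real model output, chosen only so that "cat" and "kitten" point in similar directions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (range -1 to 1)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_a * norm_b)


# Hypothetical toy "embeddings"; a real model such as all-MiniLM-L6-v2
# would return 384-dimensional vectors instead.
cat = [0.9, 0.1, 0.0, 0.2]
kitten = [0.8, 0.2, 0.1, 0.3]
invoice = [0.0, 0.9, 0.8, 0.1]

# Semantically close sentences should score higher than unrelated ones.
print(round(cosine_similarity(cat, kitten), 3))
print(round(cosine_similarity(cat, invoice), 3))
```

Cosine similarity ignores vector length and compares only direction, which is why it is the usual choice for comparing embeddings.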

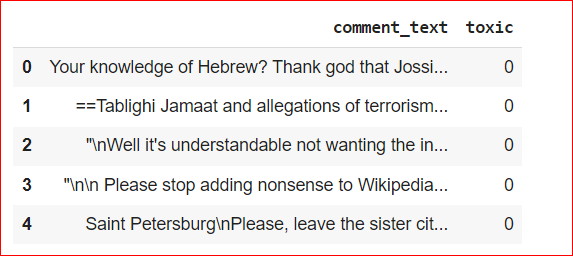

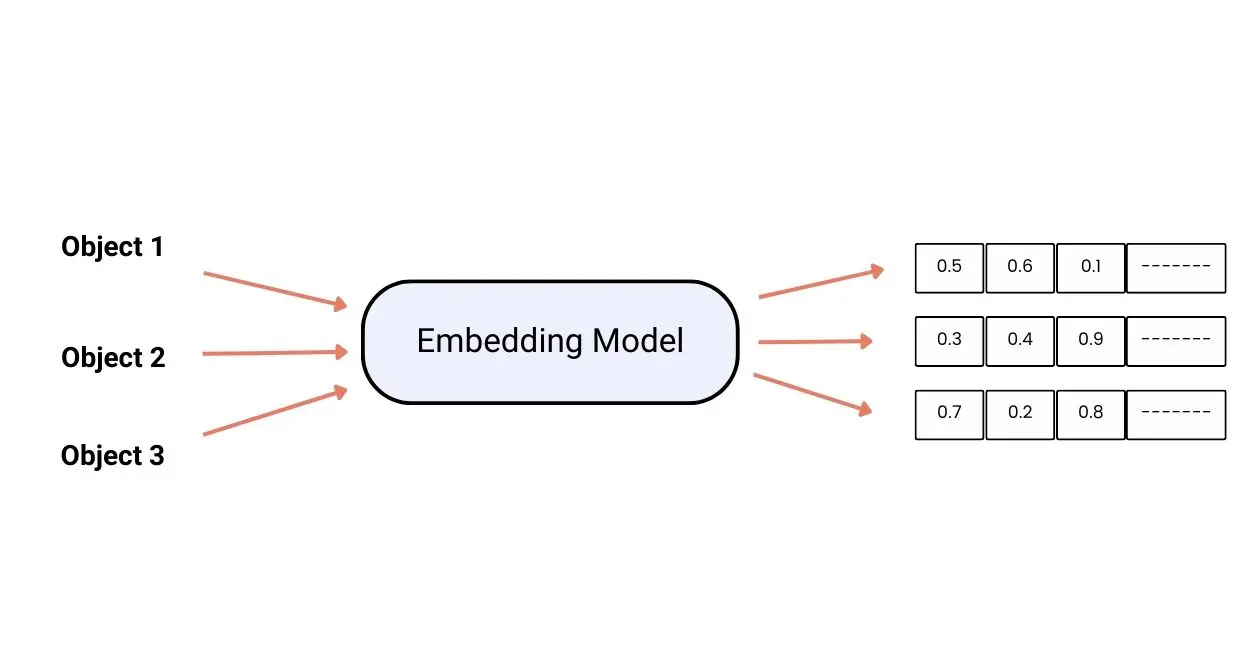

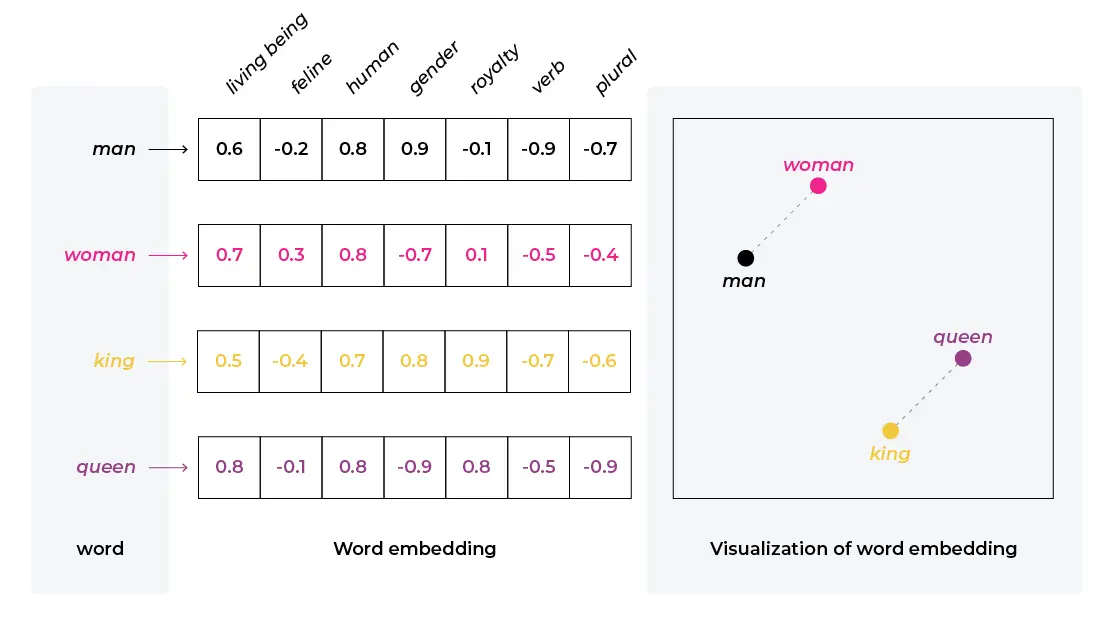

Generating embeddings: using a pre-trained NLP model, convert the sentences (for example, "Freedom is the right to choose.") into numerical vectors (embeddings). Calculating average locations: compute the average (mean) position of these embedding vectors.

The model used here, all-MiniLM-L6-v2, generates sentence embeddings of 384 values. The embedding size is a hyperparameter of the model architecture: it is fixed once the model has been trained, so a different size means choosing or training a different model. The larger the embedding size, the more information the embedding can capture; however, larger embeddings are more expensive to compute and store.

BERT is very good at learning the meaning of word tokens, but on its own it is not good at learning the meaning of whole sentences. As a result, its raw outputs are poorly suited to tasks such as sentence classification and pairwise sentence similarity. Since BERT produces one embedding per token, one way to get a sentence embedding out of BERT is to average the embeddings of all tokens (mean pooling).

Before building any deep learning model in natural language processing (NLP), text embedding plays a major role. Text embedding converts text (words or sentences) into numerical vectors: it encodes words and sentences as fixed-length dense vectors, which drastically improves the processing of textual data.
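The "calculating average locations" step can be read as a nearest-centroid classifier: average the embeddings of each class, then assign a new sentence to the class whose average is most similar. The sketch below assumes that reading. It uses hypothetical 3-dimensional vectors and made-up example sentences in place of real model output; in practice the vectors would come from something like `SentenceTransformer("all-MiniLM-L6-v2").encode(sentences)`.

```python
import math
from collections import defaultdict


def mean_vector(vectors):
    """Element-wise average of equal-length vectors (the class 'average location')."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def cosine_similarity(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def fit_centroids(embeddings, labels):
    """Group training embeddings by label and average each group."""
    groups = defaultdict(list)
    for vec, label in zip(embeddings, labels):
        groups[label].append(vec)
    return {label: mean_vector(vecs) for label, vecs in groups.items()}


def predict(centroids, embedding):
    """Return the label whose centroid is most similar to the embedding."""
    return max(centroids, key=lambda label: cosine_similarity(centroids[label], embedding))


# Hypothetical embeddings for hypothetical sentences (not real model output).
train_embeddings = [
    [0.9, 0.1, 0.0],  # "I loved this film"
    [0.8, 0.2, 0.1],  # "Great acting and story"
    [0.1, 0.9, 0.2],  # "Boring and far too long"
    [0.0, 0.8, 0.3],  # "I want my money back"
]
train_labels = ["positive", "positive", "negative", "negative"]

centroids = fit_centroids(train_embeddings, train_labels)
print(predict(centroids, [0.85, 0.15, 0.05]))
```

A nearest-centroid rule needs no training beyond averaging, which makes it a convenient baseline; a trained classifier such as logistic regression on the same embeddings is a common next step.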

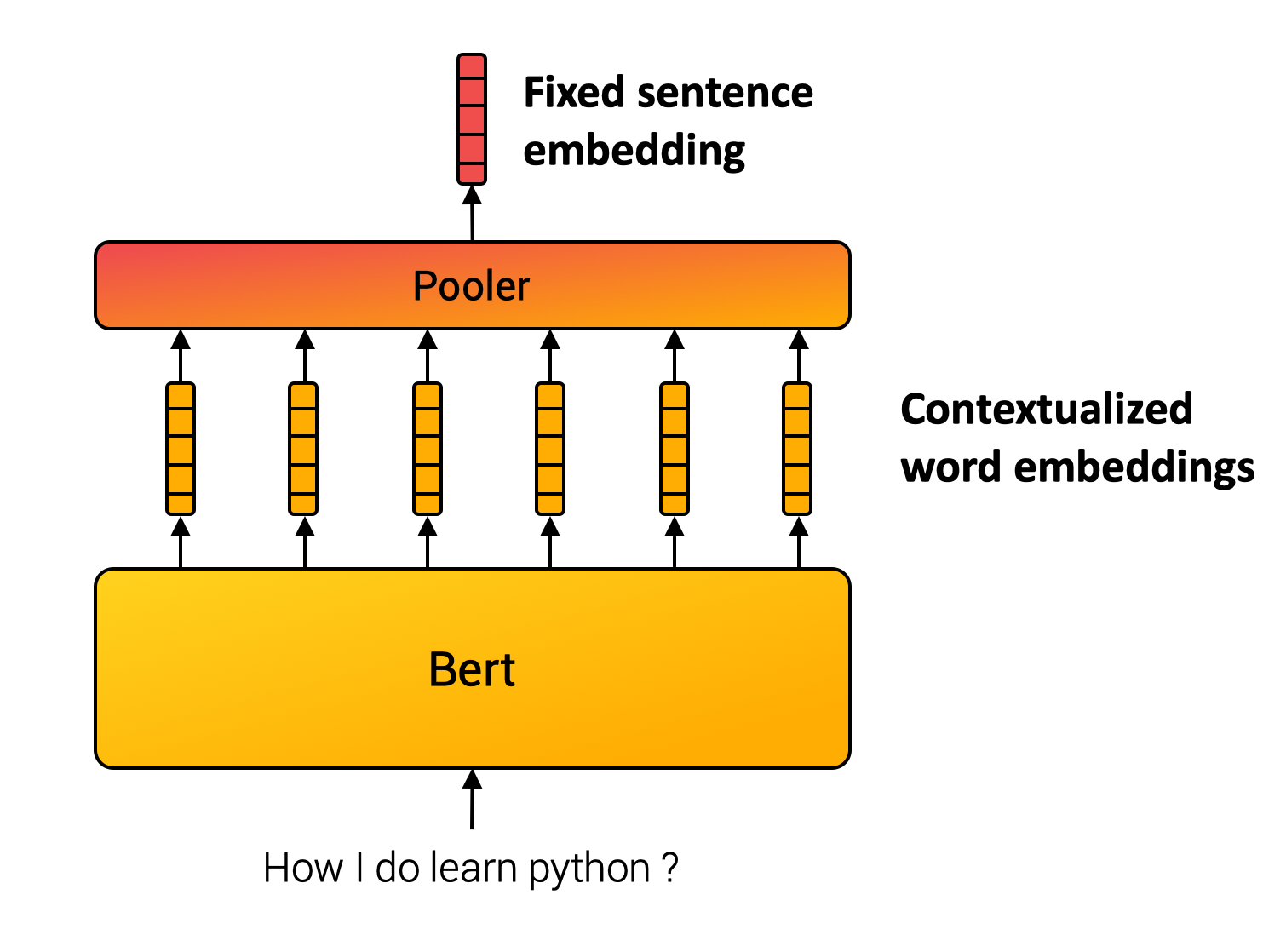

Build the encoder model. Now we'll build the encoder model that produces the sentence embeddings. It consists of three parts: a preprocessor layer that tokenizes the sentences and generates padding masks; a backbone model that generates a contextual representation of each token in the sentence; and a mean pooling layer that averages those token representations into a single sentence embedding.

The preprocessing model. Text inputs need to be transformed into numeric token IDs and arranged in several tensors before being input to BERT. TensorFlow Hub provides a matching preprocessing model for each of its BERT models, which implements this transformation using TF ops from the TF.Text library.
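The preprocessor, backbone, and mean-pooling pipeline can be sketched without any framework, assuming heavy simplifications: a whitespace tokenizer and a tiny hypothetical vocabulary stand in for BERT's WordPiece preprocessing, and a fixed lookup table stands in for the backbone (a real BERT backbone returns contextual vectors). The point it illustrates is why the padding mask matters: mean pooling should average only real tokens, not padding.

```python
# Hypothetical toy vocabulary; [PAD] and [UNK] follow BERT-style naming.
VOCAB = {"[PAD]": 0, "[UNK]": 1, "good": 2, "movie": 3, "very": 4}

# Hypothetical stand-in for the backbone: a fixed 2-d vector per token id.
# A real backbone (e.g. BERT) would produce context-dependent vectors.
EMBEDDING_TABLE = {0: [0.0, 0.0], 1: [0.5, 0.5], 2: [1.0, 0.0], 3: [0.0, 1.0], 4: [1.0, 1.0]}


def preprocess(sentences, vocab, max_len):
    """Whitespace-tokenize, map tokens to ids, pad to max_len, and build masks."""
    token_ids, masks = [], []
    for sentence in sentences:
        ids = [vocab.get(tok, vocab["[UNK]"]) for tok in sentence.lower().split()][:max_len]
        pad = max_len - len(ids)
        token_ids.append(ids + [vocab["[PAD]"]] * pad)
        masks.append([1] * len(ids) + [0] * pad)  # 1 = real token, 0 = padding
    return token_ids, masks


def backbone(token_ids):
    """Return one vector per token position (toy lookup, not contextual)."""
    return [EMBEDDING_TABLE[i] for i in token_ids]


def mean_pool(token_vectors, mask):
    """Average the token vectors of one sentence, skipping padded positions."""
    dim = len(token_vectors[0])
    count = sum(mask)
    if count == 0:
        return [0.0] * dim
    return [
        sum(vec[i] for vec, m in zip(token_vectors, mask) if m) / count
        for i in range(dim)
    ]


ids, masks = preprocess(["good movie", "very good movie"], VOCAB, max_len=4)
embeddings = [mean_pool(backbone(seq), m) for seq, m in zip(ids, masks)]
print(ids)
print(masks)
print(embeddings)
```

Both sentences end up as vectors of the same fixed length regardless of how many tokens they contain, which is what lets a downstream classifier treat them uniformly.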
