Avro Producer And Consumer With Python Using Confluent Kafka Stackstalk

Avro introduction. For streaming data use cases, Avro is a popular data serialization format. Avro is an open-source data serialization system that helps with data exchange between systems, programming languages, and processing frameworks. Avro helps define a binary format for your data, as well as map it to the programming language of choice.

Avro producer and Avro consumer: we will discuss two ways to consume the messages. The first is the CLI: we will use the CLI to consume the messages from the Kafka topic. To do this, we need to log into our Schema Registry.
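To make "a binary format for your data" concrete, here is a minimal, stdlib-only sketch of how Avro's binary encoding lays out a simple record, following the Avro specification: `int` and `long` values are zigzag-encoded and written as variable-length base-128 integers, a string is its byte length (encoded as a `long`) followed by UTF-8 bytes, and a record is simply its fields concatenated in schema order. The `User` schema and the helper names are hypothetical, only `string`, `int` and `long` fields are handled, and real code should use an Avro library instead.

```python
from typing import Any, Dict, Tuple

# Hypothetical example schema (not from the article).
USER_SCHEMA: Dict[str, Any] = {
    "type": "record",
    "name": "User",
    "fields": [
        {"name": "name", "type": "string"},
        {"name": "age", "type": "int"},
    ],
}


def encode_long(n: int) -> bytes:
    """Zigzag-encode a signed 64-bit value, then write it as a base-128 varint."""
    z = (n << 1) ^ (n >> 63)  # maps 0, -1, 1, -2, ... to 0, 1, 2, 3, ...
    out = bytearray()
    while z > 0x7F:
        out.append((z & 0x7F) | 0x80)  # low 7 bits, high bit set: more bytes follow
        z >>= 7
    out.append(z)
    return bytes(out)


def decode_long(buf: bytes, pos: int) -> Tuple[int, int]:
    """Read a varint starting at pos; return (value, position after it)."""
    z = shift = 0
    while True:
        b = buf[pos]
        pos += 1
        z |= (b & 0x7F) << shift
        shift += 7
        if not b & 0x80:
            break
    return (z >> 1) ^ -(z & 1), pos  # undo the zigzag mapping


def encode_record(schema: Dict[str, Any], record: Dict[str, Any]) -> bytes:
    """Concatenate field encodings in schema order; no field names are written."""
    out = bytearray()
    for field in schema["fields"]:
        value = record[field["name"]]
        if field["type"] in ("int", "long"):
            out += encode_long(value)
        elif field["type"] == "string":
            data = value.encode("utf-8")
            out += encode_long(len(data)) + data
        else:
            raise NotImplementedError(field["type"])
    return bytes(out)


def decode_record(schema: Dict[str, Any], buf: bytes) -> Dict[str, Any]:
    """Walk the same schema to read the fields back: the reader needs the schema."""
    record: Dict[str, Any] = {}
    pos = 0
    for field in schema["fields"]:
        if field["type"] in ("int", "long"):
            record[field["name"]], pos = decode_long(buf, pos)
        elif field["type"] == "string":
            length, pos = decode_long(buf, pos)
            record[field["name"]] = buf[pos:pos + length].decode("utf-8")
            pos += length
        else:
            raise NotImplementedError(field["type"])
    return record
```

For example, `encode_record(USER_SCHEMA, {"name": "Ann", "age": 30})` produces the five bytes `b"\x06Ann<"`: the length 3 zigzags to 6, and the age 30 zigzags to 60, which is the byte `<`. Because the bytes carry no field names or types, a consumer can only decode them if it knows the writer's schema.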

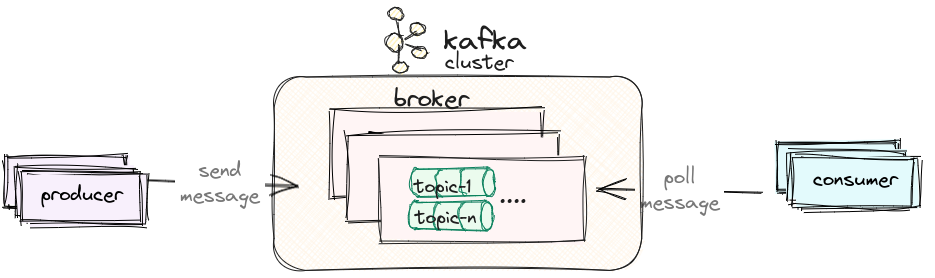

In this tutorial, we will learn how to write an Avro producer using Confluent's Kafka Python client library. The script will be executable from the command line and takes a few arguments as its input; writing it as a command-line executable gives us the flexibility to call it from anywhere we want. Serializing a message with the Avro library looks like this:

```python
import io

from avro.io import BinaryEncoder, DatumWriter
from avro.schema import parse  # the older avro-python3 package spells this Parse

# Get the schema to use to serialize the message
schema_path = "your_schema.avsc"  # placeholder: the file where you keep your Avro schema
with open(schema_path, "rb") as schema_file:
    schema = parse(schema_file.read())

# Serialize the message data using the schema
buf = io.BytesIO()
encoder = BinaryEncoder(buf)
writer = DatumWriter(schema)  # positional, since the keyword name differs between avro versions
writer.write(myobject, encoder)  # myobject: the record to send, e.g. a dict matching the schema
buf.seek(0)
message_data = buf.read()  # Avro-encoded bytes, ready to use as the Kafka message value
```

The setup used: Kafka version 0.10 from the Confluent Docker repo; ZooKeeper from wurstmeister's Docker repo; my own Docker image with the new Python Kafka client (confluent-kafka) and avro-python3; and simple producer and consumer scripts modified from cuongbangoc's upstream repo.

In this article, we will look at Avro, a popular data serialization format in streaming data applications, and develop a simple Avro producer and consumer with Python using Confluent Kafka.
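The command-line part of the producer can be sketched with the standard library's `argparse`. The article does not list the script's arguments, so the flag names here (`--bootstrap-servers`, `--topic`, `--schema-file`, `--count`) and the helper functions are hypothetical. The actual send step needs confluent-kafka and is shown only as a comment, using its `Producer(conf)`, `produce(topic, value=...)` and `flush()` calls.

```python
import argparse
from typing import Dict, List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse the producer's command-line arguments (flag names are hypothetical)."""
    parser = argparse.ArgumentParser(
        description="Send Avro-serialized messages to a Kafka topic."
    )
    parser.add_argument("--bootstrap-servers", default="localhost:9092",
                        help="comma-separated host:port list of Kafka brokers")
    parser.add_argument("--topic", required=True, help="topic to produce to")
    parser.add_argument("--schema-file", required=True,
                        help="path to the .avsc file holding the Avro schema")
    parser.add_argument("--count", type=int, default=1,
                        help="number of messages to send")
    return parser.parse_args(argv)


def producer_config(args: argparse.Namespace) -> Dict[str, str]:
    """Build the configuration dict that confluent_kafka.Producer expects."""
    # 'bootstrap.servers' is the standard librdkafka / confluent-kafka setting.
    return {"bootstrap.servers": args.bootstrap_servers}


def main(argv: Optional[List[str]] = None) -> str:
    """Parse arguments and describe what would be sent (no Kafka calls here)."""
    args = parse_args(argv)
    conf = producer_config(args)
    # With confluent-kafka installed, the send step would look roughly like:
    #   from confluent_kafka import Producer
    #   producer = Producer(conf)
    #   for _ in range(args.count):
    #       producer.produce(args.topic, value=message_data)
    #   producer.flush()
    return (f"would send {args.count} message(s) to '{args.topic}' "
            f"via {conf['bootstrap.servers']} using schema {args.schema_file}")
```

To run it as a script, call `main()` under an `if __name__ == "__main__":` guard so that importing the module does not trigger argument parsing. Keeping the parsing in its own function also makes the script easy to test with explicit argument lists.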

Create Avro Producers For Confluent Kafka With Python By Mrugank Ray

Confluent Schema Registry for storing and retrieving schemas.

Confluent Schema Registry is a service for storing and retrieving schemas. Apparently, one can use Kafka without it, just keeping the schemas together with the application code, but the Schema Registry ensures a single source of truth for versioned schemas across all consumers and producers, ruling out the possibility of schema deviation. The imports for deserializing Avro messages with the Schema Registry are:

```python
from confluent_kafka.serialization import SerializationContext, MessageField
from confluent_kafka.schema_registry import SchemaRegistryClient
from confluent_kafka.schema_registry.avro import AvroDeserializer
```
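The registry can act as a single source of truth because Schema Registry-aware serializers do not put the schema in each message, only a reference to it. In the Confluent wire format, a message value is one magic byte `0`, then the 4-byte big-endian schema ID assigned by the registry, then the Avro-encoded payload; a deserializer such as `AvroDeserializer` reads that ID and fetches the matching schema through `SchemaRegistryClient`. The stdlib-only sketch below shows the framing, with a plain dict (`FAKE_REGISTRY`, hypothetical) standing in for the registry lookup.

```python
import struct
from typing import Dict, Tuple

MAGIC_BYTE = 0
# Header layout: signed byte (magic) + unsigned 32-bit schema id, big-endian.
HEADER = struct.Struct(">bI")

# Hypothetical stand-in for Schema Registry: schema id -> schema JSON.
FAKE_REGISTRY: Dict[int, str] = {
    1: '{"type": "record", "name": "User", "fields": '
       '[{"name": "name", "type": "string"}, {"name": "age", "type": "int"}]}',
}


def frame(schema_id: int, avro_payload: bytes) -> bytes:
    """Prefix an Avro-encoded payload with the 5-byte wire-format header."""
    return HEADER.pack(MAGIC_BYTE, schema_id) + avro_payload


def unframe(message: bytes) -> Tuple[int, bytes]:
    """Split a message into (schema_id, avro_payload), validating the header."""
    if len(message) < HEADER.size:
        raise ValueError("message too short for wire-format header")
    magic, schema_id = HEADER.unpack_from(message)
    if magic != MAGIC_BYTE:
        raise ValueError(f"unknown magic byte {magic}")
    return schema_id, message[HEADER.size:]


def writer_schema_for(message: bytes, registry: Dict[int, str]) -> str:
    """Look up the writer's schema by the id embedded in the message."""
    schema_id, _ = unframe(message)
    return registry[schema_id]
```

Since only the 5-byte header travels with each message, every producer and consumer resolves schemas through the same registry, which is what rules out schema deviation between them.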

